Activity Report 2013

Project-Team STARS

Spatio-Temporal Activity Recognition Systems

RESEARCH CENTER

Sophia Antipolis - Méditerranée

THEME

Vision, perception and multimedia interpretation

Table of contents

1. Members ................................................................................ 1

2. Overall Objectives ........................................................................ 2

2.1. Presentation 2

3.1. Introduction 5

3.2. Perception for Activity Recognition 5

3.2.1. Introduction 5

3.2.2. Appearance models and people tracking 5

3.2.3. Learning shape and motion 6

3.3. Semantic Activity Recognition 6

3.3.1. Introduction 6

3.3.2. High Level Understanding 6

3.3.3. Learning for Activity Recognition 7

3.3.4. Activity Recognition and Discrete Event Systems 7

3.4. Software Engineering for Activity Recognition 7

3.4.1. Platform Architecture for Activity Recognition 8

3.4.2. Discrete Event Models of Activities 9

3.4.3. Model-Driven Engineering for Configuration and Control and Control of Video Surveillance systems 10

4. Application Domains .....................................................................10

4.1. Introduction 10

4.2. Video Analytics 10

4.3. Healthcare Monitoring 11

5. Software and Platforms .................................................................. 11

5.1. SUP 11

5.2. ViSEval 12

5.3. Clem 15

6. New Results ............................................................................. 15

6.1. Introduction 15

6.1.1. Perception for Activity Recognition 15

6.1.2. Semantic Activity Recognition 16

6.1.3. Software Engineering for Activity Recognition 17

6.2. Background Subtraction and People Detection in Videos 17

6.3. Tracking and Video Representation 19

6.4. Video segmentation with shape constraint 20

6.4.1. Video segmentation with growth constraint 20

6.4.2. Video segmentation with statistical shape prior 22

6.5. Articulating motion 25

6.6. Lossless image compression 25

6.7. People detection using RGB-D cameras 27

6.8. Online Tracking Parameter Adaptation based on Evaluation 28

6.9. People Detection, Tracking and Re-identification Through a Video Camera Network 29

6.9.1. People detection: 30

6.9.2. Object tracking: 30

6.9.3. People re-identification: 30

6.10. People Retrieval in a Network of Cameras 30

6.11. Global Tracker : an Online Evaluation Framework to Improve Tracking Quality 32

6.12. Human Action Recognition in Videos 34

6.13. 3D Trajectories for Action Recognition Using Depth Sensors 35

6.14. Unsupervised Sudden Group Movement Discovery for Video Surveillance 35

6.15. Group Behavior Understanding 37

6.16. Evaluation of an Activity Monitoring System for Older People Using Fixed Cameras 39

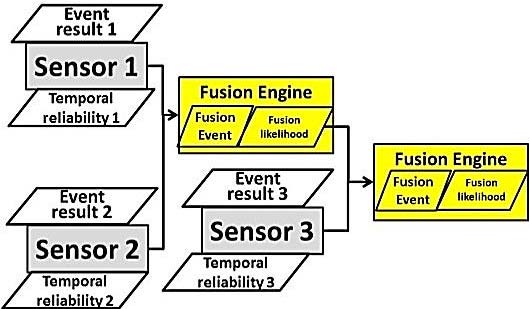

6.17. A Framework for Activity Detection of Older People Using Multiple Sensors 39

6.18. Walking Speed Detection on a Treadmill using an RGB-D Camera 41

6.19. Serious Game for older adults with dementia 42

6.20. Unsupervised Activity Learning and Recognition 44

6.21. Extracting Statistical Information from Videos with Data Mining 46

6.22. SUP 46

6.23. Model-Driven Engineering for Activity Recognition 50

6.23.1. Configuration Adaptation at Run Time 50

6.23.2. Run Time Components 51

6.24. Scenario Analysis Module 52

6.25. The Clem Workflow 52

6.26. Multiple Services for Device Adaptive Platform for Scenario Recognition 53

7. Bilateral Contracts and Grants with Industry ............................................. 53

8. Partnerships and Cooperations ........................................................... 54

8.1. Regional Initiatives 54

8.2. National Initiatives 54

8.2.1. ANR 54

8.2.1.1. MOVEMENT 54

8.2.1.2. SafEE 55

8.2.2. Investment of future 55

8.2.3. Large Scale Inria Initiative 55

8.2.4. Other collaborations 55

8.3. European Initiatives 56

8.3.1. FP7 Projects 56

8.3.1.1. CENTAUR 56

8.3.1.2. SUPPORT 56

8.3.1.3. Dem@Care 56

8.3.1.4. VANAHEIM 57

8.3.2. Collaborations in European Programs, except FP7 57

8.4. International Initiatives 58

8.4.1. Inria International Partners 58

8.4.1.1. Collaborations with Asia 58

8.4.1.2. Collaboration with U.S. 58

8.4.1.3. Collaboration with Europe 58

8.4.2. Participation In other International Programs 58

8.5. International Research Visitors 58

9. Dissemination ........................................................................... 60

9.1. Scientific Animation 60

9.2. Teaching -Supervision -Juries 60

9.2.1. Teaching 60

9.2.2. Supervision 61

9.2.3. Juries 61

9.3. Popularization 61

10. Bibliography ...........................................................................61

Project-Team STARS

Keywords: Perception, Semantics, Machine Learning, Software Engineering, Cognition

Creation of the Team: 2012 January 01, updated into Project-Team: 2013 January 01.

1. Members

Research Scientists

Monique Thonnat [Senior Researcher, Inria, HdR]

François Brémond [Team leader, Senior Researcher, Inria, HdR]

Guillaume Charpiat [Researcher, Inria]

Sabine Moisan [Researcher, Inria, HdR]

Annie Ressouche [Researcher, Inria]

Daniel Gaffé [Associate Professor, UNS, until Feb 2013, since Sep 2013]

External Collaborators

Etienne Corvée [Linkcare Services, from Jan 2013]

Alexandre Derreumaux [CHU Nice, from Jan 2013]

Guido-Tomas Pusiol [Post doc Stanford University, from Mar 2013 until Sep 2013]

Jean-Paul Rigault [UNS, from Jan 2013]

Qiao Ma [Beihang University, from Jan 2013]

Luis-Emiliano Sanchez [Universidad Nacional del Centro de la Provincia de Buenos Aires, from Mar 2013 until Apr 2013]

Silviu-Tudor Serban [MB TELECOM LTD. S.R.L. - ROMANIA, from Jan 2013 until Sep 2013]

Jean-Yves Tigli [UNS and CNRS-I3S, from Jan 2013]

Engineers

Vasanth Bathrinarayanan [FP7 SUPPORT project, Inria, from Jan 2013]

Giuseppe Donatiello [FP7 SUPPORT project, Inria, from Mar 2013]

Hervé Falciani [FP7 VANAHEIM project, Inria, from Jan 2013 until Sep 2013]

Baptiste Fosty [FP7 VANAHEIM project, Inria, until Jul 2013; CDC AZ@GAME project, Inria, from Aug 2013]

Julien Gueytat [FP7 VANAHEIM project, Inria, from Jan 2013 until Jul 2013; FP7 SUPPORT project, Inria, from Aug 2013]

Srinidhi Mukanahallipatna Simha [AE PAL, Inria, until Jul 2013]

Jacques Serlan [Inria, from Nov 2013]

PhD Students

Malik Souded [CIFRE Keeneo since Jun 2013; AE PAL from Jul 2013 until Nov 2013; FP7 SUPPORT, Inria, from Dec 2013]

Julien Badie [FP7 DEM@CARE project, Inria]

Carolina Garate Oporto [FP7 VANAHEIM project, Inria]

Minh Khue Phan Tran [CIFRE, Genious, from May 2013]

Ratnesh Kumar [FP7 DEM@CARE project, Inria]

Rim Romdhane [Inria, Inria, until Sep 2013]

Piotr Tadeusz Bilinski [FP7 SUPPORT project, Inria, until Feb 2013]

Post-Doctoral Fellows

Slawomir Bak [DGCIS PANORAMA project, Inria]

Duc Phu Chau [FP7 VANAHEIM project, Inria; DGCIS PANORAMA project, Inria]

Carlos-Fernando Crispim Junior [FP7 DEM@CARE project, Inria]

Anh-Tuan Nghiem [CDC AZ@GAME project, Inria]

Sofia Zaidenberg [FP7 VANAHEIM project, Inria, until Jul 2013; from Sep 2013 until Oct 2013]

Salma Zouaoui-Elloumi [FP7 VANAHEIM project, Inria, until Jun 2013; FP7 SUPPORT from Jul 2013]

Serhan Cosar [FP7 SUPPORT project, Inria, from Mar 2013]

Visiting Scientists

Vít Libal [Honeywell Praha, from Jul 2013 until Oct 2013]

Marco San Biagio [Italian Inst. of Tech. of Genova, from Apr 2013 until Sep 2013]

Kartick Subramanian [Nanyang Technological University, from Mar 2013 until Aug 2013]

Administrative Assistant

Jane Desplanques [Inria]

Others

Michal Koperski [FP7 EIT ICT LABS GA project, Inria, from Apr 2013 until Sep 2013; CDC TOYOTA project, Inria, from Oct 2013]

Imad Rida [FP7 EIT ICT LABS GA project, Inria, from Mar 2013 until Aug 2013]

Abhineshwar Tomar [FP7 DEM@CARE project, Inria, until Apr 2013]

Vaibhav Katiyar [FP7 SUPPORT project, Asian Institute of Technology Khlong Luang Pathumtani, Thailand, until Jan 2013]

Mohammed Cherif Bergheul [EGIDE, Inria, from Apr 2013 until Sep 2013]

Stefanus Candra [EGIDE, Inria, from Aug 2013 until Dec 2013]

Agustín Caverzasi [EGIDE, Inria, from Aug 2013]

Sahil Dhawan [EGIDE, Inria, from Jan 2013 until Jul 2013]

Narjes Ghrairi [EGIDE, Inria, from Apr 2013 until Sep 2013]

Joel Wanza Weloli [EGIDE, Inria, from Jun 2013 until Aug 2013]

2. Overall Objectives

2.1. Presentation

2.1.1. Research Themes

STARS (Spatio-Temporal Activity Recognition Systems) focuses on the design of cognitive systems for activity recognition. We aim at endowing cognitive systems with perceptual capabilities to reason about an observed environment and to provide a variety of services to people living in this environment while preserving their privacy. In today's world, a huge number of new sensors and hardware devices are available, potentially addressing new needs of modern society. However, the lack of automated processes (with no human interaction) able to extract meaningful and accurate information (i.e. a correct understanding of the situation) has often generated frustration in society, especially among older people. Therefore, the objective of Stars is to propose novel autonomous systems for the real-time semantic interpretation of dynamic scenes observed by sensors. We study long-term spatio-temporal activities performed by several interacting agents such as human beings, animals and vehicles in the physical world. Such systems also raise fundamental software engineering problems, both to specify them and to adapt them at run time.

We propose new techniques at the frontier between computer vision, knowledge engineering, machine learning and software engineering. The major challenge in semantic interpretation of dynamic scenes is to bridge the gap between the task-dependent interpretation of data and the flood of measures provided by sensors. The problems we address range from physical object detection, activity understanding and activity learning to vision system design and evaluation. The two principal classes of human activities we focus on are assistance to older adults and video analytics.

A typical example of a complex activity is shown in Figure 1 and Figure 2 for a homecare application. In this example, the monitoring of an older person's apartment could last several months. The activities involve interactions between the observed person and several pieces of equipment. The application goal is to recognize everyday activities at home through formal activity models (as shown in Figure 3) and data captured by a network of sensors embedded in the apartment. Here, typical services include an objective assessment of the frailty level of the observed person, in order to provide more personalized care and to monitor the effectiveness of a prescribed therapy. The assessment of the frailty level is performed by an Activity Recognition System which transmits a textual report (containing only meta-data) to the general practitioner who follows the older person. Thanks to the recognized activities, the quality of life of the observed people can thus be improved and their personal information can be preserved.

Figure 1. Homecare monitoring: the set of sensors embedded in an apartment

Figure 2. Homecare monitoring: the different views of the apartment captured by 4 video cameras

The ultimate goal is for cognitive systems to perceive and understand their environment so that they can provide appropriate services to a potential user. An important step is to propose a computational representation of people's activities in order to adapt these services to them. Up to now, the most effective sensors have been video cameras, owing to the rich information they provide on the observed environment. However, these sensors are currently perceived as intrusive. A key issue is to capture the pertinent raw data for adapting the services to the people while preserving their privacy. We plan to study different solutions including, of course, the local processing of the data without transmission of images and the use of new compact sensors developed for interaction (also called RGB-Depth sensors, an example being the Kinect) or networks of small non-visual sensors.

Activity (PrepareMeal,
    PhysicalObjects( (p : Person), (z : Zone), (eq : Equipment))
    Components( (s_inside : InsideKitchen(p, z))
                (s_close : CloseToCountertop(p, eq))
                (s_stand : PersonStandingInKitchen(p, z)))
    Constraints( (z->Name = Kitchen)
                 (eq->Name = Countertop)
                 (s_close->Duration >= 100)
                 (s_stand->Duration >= 100))
    Annotation( AText("prepare meal")
                AType("not urgent")))

Figure 3. Homecare monitoring: example of an activity model describing a scenario related to the preparation of a meal with a high-level language
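To make the activity model of Figure 3 more concrete, the following Python sketch shows one way such a model could be evaluated once its components have been observed. It is not the Stars scenario language or its recognition engine: the `Component` class, the `prepare_meal_recognized` function, and the reading of `Duration` as a plain number (the unit is not stated in the model) are assumptions made for illustration only.

```python
from dataclasses import dataclass


@dataclass
class Component:
    """A recognized sub-activity (hypothetical representation)."""
    name: str       # e.g. "InsideKitchen"
    duration: int   # how long the state has held (unit assumed)


def prepare_meal_recognized(zone_name, equipment_name, components):
    """Check the constraints of the PrepareMeal model from Figure 3.

    `components` maps the labels s_inside, s_close and s_stand to
    Component instances; a missing label means the state was not observed.
    """
    required = ("s_inside", "s_close", "s_stand")
    if any(label not in components for label in required):
        return False
    return (
        zone_name == "Kitchen"
        and equipment_name == "Countertop"
        and components["s_close"].duration >= 100
        and components["s_stand"].duration >= 100
    )


observed = {
    "s_inside": Component("InsideKitchen", 250),
    "s_close": Component("CloseToCountertop", 140),
    "s_stand": Component("PersonStandingInKitchen", 120),
}
print(prepare_meal_recognized("Kitchen", "Countertop", observed))
```

In this sketch the model is hard-coded as a function; a real system would more plausibly interpret the declarative model itself, so that new activities can be added without writing code.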

2.1.2. International and Industrial Cooperation

Our work has been applied in the context of more than 10 European projects such as COFRIEND, ADVISOR, SERKET, CARETAKER, VANAHEIM, SUPPORT, DEM@CARE, VICOMO. We had or have industrial collaborations in several domains: transportation (CCI Airport Toulouse Blagnac, SNCF, Inrets, Alstom, Ratp, Turin GTT (Italy)), banking (Crédit Agricole Bank Corporation, Eurotelis and Ciel), security (Thales R&T FR, Thales Security Syst, EADS, Sagem, Bertin, Alcatel, Keeneo), multimedia (Multitel (Belgium), Thales Communications, Idiap (Switzerland)), civil engineering (Centre Scientifique et Technique du Bâtiment (CSTB)), computer industry (BULL), software industry (AKKA), hardware industry (ST-Microelectronics) and health industry (Philips, Link Care Services, Vistek).

We have international cooperations with research centers such as Reading University (UK), ENSI Tunis (Tunisia), National Cheng Kung University, National Taiwan University (Taiwan), MICA (Vietnam), IPAL, I2R (Singapore), University of Southern California, University of South Florida, University of Maryland (USA).

2.2. Highlights of the Year

Stars designs cognitive vision systems for activity recognition based on sound software engineering paradigms.

During this period, we have designed several novel algorithms for activity recognition systems. In particular, we have extended an efficient algorithm for automatically tuning the parameters of the people tracking algorithm. We have designed a compact system for activity recognition running on a mini-PC, which is easily deployable using RGBD video cameras. This algorithm has been tested on more than 70 videos of older adults performing 15 min of physical exercises and cognitive tasks. This evaluation has been part of a large clinical trial with Nice Hospital to characterize the behaviour profile of Alzheimer patients compared to healthy older people.

We have also been able to demonstrate live tracking and recognition of group behaviours in the Paris subway. We have efficiently stored in a huge database the meta-data (e.g. people trajectories) generated from the processing of 8 video cameras, each recording lasting several days. From these meta-data, we have automatically discovered a few hundred rare events, such as loitering and collapsing, in order to display them on the screens of subway security operators.

Monique Thonnat has been at the head of the Inria Bordeaux Center since the first of November 2013. She is still working part-time in the Stars team.

3. Research Program

3.1. Introduction

Stars follows three main research directions: perception for activity recognition, semantic activity recognition, and software engineering for activity recognition. These three research directions are interleaved: the software architecture direction provides new methodologies for building safe activity recognition systems and the perception and the semantic activity recognition directions provide new activity recognition techniques which are designed and validated for concrete video analytics and healthcare applications. Conversely, these concrete systems raise new software issues that enrich the software engineering research direction.

Transversally, we consider a new research axis in machine learning, combining a priori knowledge and learning techniques, to set up the various models of an activity recognition system. A major objective is to automate model building or model enrichment at the perception level and at the understanding level.

3.2. Perception for Activity Recognition

Participants: Guillaume Charpiat, François Brémond, Sabine Moisan, Monique Thonnat.

Computer Vision; Cognitive Systems; Learning; Activity Recognition.

3.2.1. Introduction

Our main goal in perception is to develop vision algorithms able to address the large variety of conditions characterizing real-world scenes in terms of sensor conditions, hardware requirements, lighting conditions, physical objects, and application objectives. We also address several issues that combine machine learning and perception techniques: learning people appearance, parameters for system control, and shape statistics.

3.2.2. Appearance models and people tracking

An important issue is to detect physical objects in real time from perceptual features and predefined 3D models. It requires finding a good balance between efficient methods and precise spatio-temporal models. Many improvements and analyses need to be performed in order to tackle the large range of people detection scenarios.

Appearance models. In particular, we study the temporal variation of the features characterizing the appearance of a human. This task could be achieved by clustering potential candidates depending on their position and their reliability. It can provide any people tracking algorithm with reliable features, making it possible, for instance, to (1) better track people or their body parts during occlusion, or to (2) model people's appearance for re-identification purposes in mono- and multi-camera networks, which is still an open issue. The underlying challenge of the person re-identification problem arises from significant differences in illumination, pose and camera parameters. Re-identification approaches have two aspects: (1) establishing correspondences between body parts and (2) generating signatures that are invariant to different color responses. As we already have several color-invariant descriptors, we now focus more on aligning two people detections and on finding their corresponding body parts. Once body parts have been detected, the approach can handle pose variations. Further, different body parts might have different influence on finding the correct match among a whole gallery dataset. Thus, re-identification approaches have to search for matching strategies. As the results of re-identification are always given as a ranked list, re-identification focuses on learning to rank. "Learning to rank" is a type of machine learning problem in which the goal is to automatically construct a ranking model from training data.
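As an illustration of why re-identification output takes the form of a ranking, the sketch below sorts a gallery of known identities by the distance between their appearance signatures and that of a probe detection. The fixed Euclidean distance is an assumption standing in for the learned ranking model discussed above, and the signatures, identity names and `rank_gallery` function are hypothetical.

```python
import math


def euclidean(a, b):
    """Distance between two appearance signatures (plain feature vectors)."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def rank_gallery(probe, gallery):
    """Return gallery identities sorted from most to least similar to the probe."""
    return sorted(gallery, key=lambda identity: euclidean(probe, gallery[identity]))


# Toy signatures: three-bin colour histograms (illustrative values only).
gallery = {
    "person_A": [0.7, 0.2, 0.1],
    "person_B": [0.1, 0.8, 0.1],
    "person_C": [0.3, 0.3, 0.4],
}
probe = [0.65, 0.25, 0.10]
ranking = rank_gallery(probe, gallery)
print(ranking)
```

A learning-to-rank approach would replace `euclidean` with a scoring function fitted on training pairs, so that correct matches tend to appear near the top of the list.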

Therefore, we work on information fusion to handle perceptual features coming from various sensors (several cameras covering a large scale area or heterogeneous sensors capturing more or less precise and rich information). New 3D RGB-D sensors are also investigated, to help in getting an accurate segmentation for specific scene conditions.

Long term tracking. For activity recognition we need robust and coherent object tracking over long periods of time (often several hours in video surveillance and several days in healthcare). To guarantee the long-term coherence of tracked objects, spatio-temporal reasoning is required. Modelling and managing the uncertainty of these processes is also an open issue. In Stars we propose to add a reasoning layer to a classical Bayesian framework modelling the uncertainty of the tracked objects. This reasoning layer can take into account the a priori knowledge of the scene for outlier elimination and long-term coherency checking.
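The idea of a reasoning layer placed on top of a Bayesian tracker can be sketched as follows. This is a simplified illustration, not the Stars tracker: it assumes a scalar Kalman filter with a constant-position model, and the "reasoning" is reduced to two hypothetical rules, a walkable interval standing in for a priori scene knowledge and a maximum plausible jump standing in for coherency checking.

```python
def kalman_update(mean, var, measurement, meas_var):
    """Standard scalar Kalman measurement update."""
    gain = var / (var + meas_var)
    new_mean = mean + gain * (measurement - mean)
    new_var = (1.0 - gain) * var
    return new_mean, new_var


def track(measurements, walkable=(0.0, 10.0), max_jump=2.0,
          init_mean=0.0, init_var=1.0, process_var=0.1, meas_var=0.5):
    """Filter 1D positions, skipping measurements the reasoning layer rejects."""
    mean, var = init_mean, init_var
    estimates = []
    for z in measurements:
        var += process_var  # prediction step of a constant-position model
        inside_scene = walkable[0] <= z <= walkable[1]  # a priori scene knowledge
        plausible = abs(z - mean) <= max_jump           # coherency check
        if inside_scene and plausible:
            mean, var = kalman_update(mean, var, z, meas_var)
        estimates.append(mean)
    return estimates


estimates = track([0.2, 0.5, 25.0, 0.9, 1.2])
print(estimates)
```

The third measurement lies outside the walkable interval, so the estimate is carried over unchanged while the variance keeps growing, which makes the filter more receptive to the next accepted measurement.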

Controlling system parameters. Another research direction is to manage a library of video processing programs. We are building a perception library by selecting robust algorithms for feature extraction, by ensuring they work efficiently under real-time constraints and by formalizing their conditions of use within a program supervision model. In the case of video cameras, at least two problems are still open: robust image segmentation and meaningful feature extraction. For these issues, we are developing new learning techniques.

3.2.3. Learning shape and motion

Another approach to jointly improve segmentation and tracking is to consider videos as 3D volumetric data and to search for trajectories of points that are statistically coherent both spatially and temporally. This point of view enables new kinds of statistical segmentation criteria and ways to learn them.

We are also using the shape statistics developed in [5] for the segmentation of images or videos with shape prior, by learning local segmentation criteria that are suitable for parts of shapes. This unifies patch-based detection methods and active-contour-based segmentation methods in a single framework. These shape statistics can also be used for a fine classification of postures and gestures, in order to extract more precise information from videos for further activity recognition. In particular, the notion of shape dynamics has to be studied.

More generally, to improve segmentation quality and speed, different optimization tools such as graph-cuts can be used, extended or improved.

3.3. Semantic Activity Recognition

Participants: Guillaume Charpiat, François Brémond, Sabine Moisan, Monique Thonnat.

Activity Recognition, Scene Understanding, Computer Vision

3.3.1. Introduction

Semantic activity recognition is a complex process where information is abstracted through four levels: signal (e.g. pixel, sound), perceptual features, physical objects and activities. The signal and the feature levels are characterized by strong noise, ambiguous, corrupted and missing data. The whole process of scene understanding consists in analysing this information to bring forth pertinent insight of the scene and its dynamics while handling the low level noise. Moreover, to obtain a semantic abstraction, building activity models is a crucial point. A still open issue consists in determining whether these models should be given a priori or learned. Another challenge consists in organizing this knowledge in order to capitalize experience, share it with others and update it along with experimentation. To face this challenge, tools in knowledge engineering such as machine learning or ontology are needed.

Thus we work along the two following research axes: high level understanding (to recognize the activities of physical objects based on high level activity models) and learning (how to learn the models needed for activity recognition).

3.3.2. High Level Understanding

A challenging research axis is to recognize subjective activities of physical objects (i.e. human beings, animals, vehicles) based on a priori models and objective perceptual measures (e.g. robust and coherent object tracks).

To reach this goal, we have defined original activity recognition algorithms and activity models. Activity recognition algorithms include the computation of spatio-temporal relationships between physical objects. All the possible relationships may correspond to activities of interest and all have to be explored in an efficient way. The variety of these activities, generally called video events, is huge and depends on their spatial and temporal granularity, on the number of physical objects involved in the events, and on the event complexity (number of components constituting the event).
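As a small example of a spatio-temporal relationship between two physical objects, the sketch below computes the frame intervals during which two tracked objects remain close to each other. The track format (one `(x, y)` position per frame), the `close_intervals` function and the distance threshold are assumptions for illustration; they are not taken from the Stars algorithms.

```python
import math


def close_intervals(track_a, track_b, max_dist=1.5):
    """Return (start, end) frame intervals during which two objects stay close.

    Each track is a list of (x, y) positions, one per frame.
    """
    intervals, start = [], None
    for frame, (pa, pb) in enumerate(zip(track_a, track_b)):
        near = math.dist(pa, pb) <= max_dist
        if near and start is None:
            start = frame                      # the relation begins to hold
        elif not near and start is not None:
            intervals.append((start, frame - 1))
            start = None                       # the relation stops holding
    if start is not None:                      # still close at the last frame
        intervals.append((start, min(len(track_a), len(track_b)) - 1))
    return intervals


person = [(0, 0), (1, 0), (2, 0), (3, 0), (4, 0), (5, 0)]
counter = [(3, 0)] * 6
print(close_intervals(person, counter))
```

The resulting intervals carry both a spatial fact (proximity) and a temporal extent, which is the kind of information a duration constraint in an activity model can then be checked against.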

Concerning the modelling of activities, we are working in two directions: uncertainty management for representing probability distributions, and knowledge acquisition facilities based on ontological engineering techniques. For the first direction, we are investigating classical statistical techniques and logical approaches. We have also built a language for video event modelling and a visual concept ontology (including color, texture and spatial concepts) to be extended with temporal concepts (motion, trajectories, events ...) and other perceptual concepts (physiological sensor concepts ...).

3.3.3. Learning for Activity Recognition

Given the difficulty of building an activity recognition system with a priori knowledge for a new application, we study how machine learning techniques can automate building or completing models at the perception level and at the understanding level.

At the understanding level, we are learning primitive event detectors. This can be done for example by learning visual concept detectors using SVMs (Support Vector Machines) with perceptual feature samples. An open question is how far we can go in weakly supervised learning for each type of perceptual concept (i.e. leveraging the human annotation task). A second direction is to learn typical composite event models for frequent activities using trajectory clustering or data mining techniques. We call a particular combination of several primitive events a composite event.
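A very simple way to look for candidate composite events is to count which short sequences of primitive events recur across recordings. The sketch below does this with contiguous sequences of fixed length; it is a deliberately naive stand-in for the trajectory clustering and data mining techniques mentioned above, and the event names and the `frequent_sequences` function are hypothetical.

```python
from collections import Counter


def frequent_sequences(event_logs, length=2, min_support=2):
    """Count contiguous sequences of primitive events that recur across logs.

    The support of a sequence is the number of logs that contain it.
    """
    support = Counter()
    for log in event_logs:
        # a set, so that each log contributes at most once per sequence
        seen = {tuple(log[i:i + length]) for i in range(len(log) - length + 1)}
        support.update(seen)
    return {seq: n for seq, n in support.items() if n >= min_support}


logs = [
    ["enter_kitchen", "open_fridge", "close_to_countertop"],
    ["enter_kitchen", "open_fridge", "leave_kitchen"],
    ["enter_kitchen", "close_to_countertop"],
]
print(frequent_sequences(logs))
```

Sequences that pass the support threshold are only candidates: deciding whether they correspond to a meaningful activity still requires domain knowledge or further validation.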

3.3.4. Activity Recognition and Discrete Event Systems

The previous research axes are essential to cope with semantic interpretation. However, they tend to leave aside the purely event-driven aspects of scenario recognition. These aspects have been studied for a long time at a theoretical level and have led to methods and tools that may bring extra value to activity recognition, the most important being the possibility of formal analysis, verification and validation.

We have thus started to specify a formal model to define, analyze, simulate, and prove scenarios. This model deals with both absolute time (to be realistic and efficient in the analysis phase) and logical time (to benefit from well-known mathematical models providing re-usability, easy extension, and verification). Our purpose is to offer a generic tool to express and recognize activities associated with a concrete language to specify activities in the form of a set of scenarios with temporal constraints. The theoretical foundations and the tools being shared with Software Engineering aspects, they will be detailed in section 3.4.

The results of the research performed in perception and semantic activity recognition (first and second research directions) produce new techniques for scene understanding and contribute to specify the needs for new software architectures (third research direction).

3.4. Software Engineering for Activity Recognition

Participants: Sabine Moisan, Annie Ressouche, Jean-Paul Rigault, François Brémond.

Software Engineering, Generic Components, Knowledge-based Systems, Software Component Platform, Object-oriented Frameworks, Software Reuse, Model-driven Engineering

The aim of this research axis is to build general solutions and tools to develop systems dedicated to activity recognition. For this, we rely on state-of-the-art Software Engineering practices to ensure both sound design and easy use, providing genericity, modularity, adaptability, reusability, extensibility, dependability, and maintainability.

This research requires theoretical studies combined with validation based on concrete experiments conducted in Stars. We work on the following three research axes: models (adapted to the activity recognition domain), platform architecture (to cope with deployment constraints and run time adaptation), and system verification (to generate dependable systems). For all these tasks we follow state of the art Software Engineering practices and, if needed, we attempt to set up new ones.

3.4.1. Platform Architecture for Activity Recognition

Figure 4. Global Architecture of an Activity Recognition Platform. The grey areas contain software engineering support modules whereas the other modules correspond to software components (at Task and Component levels) or to generated systems (at Application level).

In the former project teams Orion and Pulsar, we developed two platforms: VSIP, a library of real-time video understanding modules, and LAMA [15], a software platform for designing not only knowledge bases, but also inference engines and additional tools. LAMA offers toolkits to build and to adapt all the software elements that compose a knowledge-based system or a cognitive system.

Figure 4 presents our conceptual vision for the architecture of an activity recognition platform. It consists of three levels:

• The Component Level, the lowest one, offers software components providing elementary operations and data for perception, understanding, and learning.

  - Perception components contain algorithms for sensor management, image and signal analysis, image and video processing (segmentation, tracking...), etc.

  - Understanding components provide the building blocks for Knowledge-based Systems: knowledge representation and management, elements for controlling inference engine strategies, etc.

  - Learning components implement different learning strategies, such as Support Vector Machines (SVM), Case-based Learning (CBL), clustering, etc.

  An Activity Recognition system is likely to pick components from these three packages. Hence, tools must be provided to configure (select, assemble), simulate, verify the resulting component combination. Other support tools may help to generate task or application dedicated languages or graphic interfaces.

• The Task Level, the middle one, contains executable realizations of individual tasks that will collaborate in a particular final application. Of course, the code of these tasks is built on top of the components from the previous level. We have already identified several of these important tasks: Object Recognition, Tracking, Scenario Recognition... In the future, other tasks will probably enrich this level.

  For these tasks to nicely collaborate, communication and interaction facilities are needed. We shall also add MDE-enhanced tools for configuration and run-time adaptation.

• The Application Level integrates several of these tasks to build a system for a particular type of application, e.g., vandalism detection, patient monitoring, aircraft loading/unloading surveillance, etc. Each system is parametrized to adapt to its local environment (number, type, location of sensors, scene geometry, visual parameters, number of objects of interest...). Thus configuration and deployment facilities are required.

The philosophy of this architecture is to offer at each level a balance between the widest possible genericity and the maximum effective reusability, in particular at the code level. To cope with real application requirements, we shall also investigate distributed architecture, real-time implementation, and user interfaces.

Concerning implementation issues, we shall use existing open standard tools whenever possible, such as NuSMV for model-checking, Eclipse for graphic interfaces or model engineering support, Alloy for constraint representation and SAT solving, etc. Note that, in Figure 4, some of the boxes can be naturally adapted from existing SUP elements (many perception and understanding components, program supervision, scenario recognition...) whereas others are to be developed, completely or partially (learning components, most support and configuration tools).

3.4.2. Discrete Event Models of Activities

As mentioned in the previous section (3.3) we have started to specify a formal model of scenario dealing with both absolute time and logical time. Our scenario and time models as well as the platform verification tools rely on a formal basis, namely the synchronous paradigm. To recognize scenarios, we consider activity descriptions as synchronous reactive systems and we apply general modelling methods to express scenario behaviour.
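The following sketch gives a rough idea of what treating an activity description as a synchronous reactive system can mean. It is an assumption-laden toy, not the Stars scenario model: at each logical instant the automaton receives the set of events present at that instant, reacts once, and emits a set of output events. The scenario ("enter a zone, stay at least a given number of instants, then leave"), the event names and the `ScenarioAutomaton` class are all hypothetical.

```python
class ScenarioAutomaton:
    """Recognizes 'enter zone, stay at least min_instants, then leave'."""

    def __init__(self, min_instants=3):
        self.min_instants = min_instants
        self.state = "outside"
        self.count = 0

    def react(self, events):
        """Perform one synchronous reaction; return the set of emitted events."""
        if self.state == "outside":
            if "enter" in events:
                self.state, self.count = "inside", 0
            return set()
        # state is "inside": one more logical instant has elapsed
        self.count += 1
        if "leave" in events:
            recognized = self.count >= self.min_instants
            self.state = "outside"
            return {"long_stay"} if recognized else set()
        return set()


automaton = ScenarioAutomaton()
inputs = [{"enter"}, set(), set(), {"leave"}, {"enter"}, {"leave"}]
outputs = [automaton.react(instant) for instant in inputs]
print(outputs)
```

Because time is counted in logical instants rather than in seconds, the behaviour is deterministic for a given input sequence, which is what makes this style of model amenable to formal verification.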

Activity recognition systems usually exhibit many safety issues. From the software engineering point of view we only consider software security. Our previous work on verification and validation has to be pursued; in particular, we need to test its scalability and to develop associated tools. Model-checking is an appealing technique since it can be automated and helps to produce code that has been formally proved. Our verification method follows a compositional approach, a well-known way to cope with scalability problems in model-checking.
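To show what automated verification of a safety property amounts to in its simplest form, the sketch below exhaustively explores the reachable states of a toy model and returns a counterexample path if a forbidden state can be reached. This is plain explicit-state reachability, assumed here for illustration; it is neither the compositional method mentioned above nor the symbolic algorithms of a tool such as NuSMV. The two toy models and the property are hypothetical.

```python
from collections import deque


def check_safety(initial, successors, is_bad):
    """Explore all reachable states; return a counterexample path or None."""
    parents = {initial: None}
    queue = deque([initial])
    while queue:
        state = queue.popleft()
        if is_bad(state):
            path = []
            while state is not None:   # walk back to the initial state
                path.append(state)
                state = parents[state]
            return path[::-1]
        for nxt in successors(state):
            if nxt not in parents:
                parents[nxt] = state
                queue.append(nxt)
    return None


# States are pairs (person_in_zone, alarm_on).
def correct_model(state):
    # the alarm is switched in the same reaction as the zone change
    return [(True, True), (False, False)]


def faulty_model(state):
    in_zone, _alarm = state
    # the alarm only copies the previous value of in_zone (one instant late)
    return [(True, in_zone), (False, in_zone)]


def unsafe(state):
    in_zone, alarm = state
    return in_zone and not alarm


print(check_safety((False, False), correct_model, unsafe))
print(check_safety((False, False), faulty_model, unsafe))
```

The state space here has at most four states; the scalability problem mentioned above arises because the number of states grows exponentially with the number of components, which is what compositional approaches try to contain.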

Moreover, recognizing real scenarios is not a purely deterministic process. Sensor performance, precision of image analysis, and scenario descriptions may induce various kinds of uncertainty. While taking this uncertainty into account, we should still keep our model of time deterministic, modular, and formally verifiable. To formally describe probabilistic timed systems, the most popular approach involves probabilistic extensions of timed automata. New model checking techniques can be used as verification means, but relying on model checking techniques is not sufficient. Model checking is a powerful tool to prove decidable properties, but introducing uncertainty may lead to infinite state spaces or even undecidable properties. Thus model checking validation has to be complemented with non-exhaustive methods such as abstract interpretation.

3.4.3. Model-Driven Engineering for Configuration and Control of Video Surveillance systems

Model-driven engineering techniques can support the configuration and dynamic adaptation of video surveillance systems designed with our SUP activity recognition platform. The challenge is to cope with the many causes of variability (functional as well as non-functional), both in the video application specification and in the concrete SUP implementation. We have used feature models to define two models: a generic model of video surveillance applications and a model of configuration for SUP components and chains. Both of them express variability factors. Ultimately, we wish to automatically generate a SUP component assembly from an application specification, using models to represent transformations [57]. Our models are enriched with intra- and inter-model constraints. Inter-model constraints specify models to represent transformations. Feature models are appropriate to describe variants; they are simple enough for video surveillance experts to express their requirements. Yet, they are powerful enough to be amenable to static analysis [75]. In particular, the constraints can be analysed as a SAT problem.
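As an illustration of how feature-model constraints can be read as a satisfiability problem, the following minimal sketch enumerates the valid configurations of a tiny feature model. The feature names and constraints are hypothetical and chosen only for the example; they are not taken from the Stars models. A brute-force enumeration stands in for a real SAT solver.

```python
from itertools import product

# Hypothetical features of a video surveillance application (illustrative only).
FEATURES = ["color_camera", "infrared_camera", "shadow_removal", "night_mode"]

# Each constraint is a Boolean predicate over a configuration (feature -> bool).
CONSTRAINTS = [
    # Alternative group: exactly one camera type is selected.
    lambda c: c["color_camera"] != c["infrared_camera"],
    # "Requires" constraint: shadow removal needs a colour camera.
    lambda c: (not c["shadow_removal"]) or c["color_camera"],
    # "Requires" constraint: night mode needs an infrared camera.
    lambda c: (not c["night_mode"]) or c["infrared_camera"],
]


def valid_configurations(features, constraints):
    """Enumerate every feature selection that satisfies all constraints."""
    configs = []
    for values in product([False, True], repeat=len(features)):
        config = dict(zip(features, values))
        if all(rule(config) for rule in constraints):
            configs.append(config)
    return configs


configs = valid_configurations(FEATURES, CONSTRAINTS)
print(len(configs), "valid configurations")
```

Exhaustive enumeration is exponential in the number of features, so it is only meant to show the encoding; a realistic model would be handed to a dedicated solver.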

An additional challenge is to manage the possible run-time changes of implementation due to context variations (e.g., lighting conditions, changes in the reference scene, etc.). Video surveillance systems have to dynamically adapt to a changing environment. The use of models at run-time is a solution. We are defining adaptation rules corresponding to the dependency constraints between specification elements in one model and software variants in the other [56], [85], [78].

4. Application Domains

4.1. Introduction

While the focus of our research is to develop techniques, models and platforms that are generic and reusable, we also put effort into the development of real applications. The motivation is twofold. The first is to validate the new ideas and approaches we introduce. The second is to demonstrate how to build working systems for real applications of various domains based on the techniques and tools developed. Indeed, Stars focuses on two main domains: video analytics and healthcare monitoring.

4.2. Video Analytics

Our experience in video analytics [7], [1], [9] (also referred to as visual surveillance) is a strong basis which ensures both a precise view of the research topics to develop and a network of industrial partners, ranging from end-users to integrators and software editors, who provide data, objectives, evaluation and funding.

For instance, the Keeneo start-up was created in July 2005 for the industrialization and exploitation of Orion and Pulsar results in video analytics (VSIP library, which was a previous version of SUP). Keeneo was bought by Digital Barriers in August 2011 and is now independent from Inria. However, Stars continues to maintain a close cooperation with Keeneo for impact analysis of SUP and for exploitation of new results.

Moreover, new challenges are arising from the visual surveillance community. For instance, people detection and tracking in a crowded environment are still open issues despite the high competition on these topics. Also, detecting abnormal activities may require discovering rare events in very large video databases often characterized by noisy or incomplete data.

4.3. Healthcare Monitoring

We have initiated a new strategic partnership (called CobTek) with Nice hospital [66], [86] (CHU Nice, Prof P. Robert) to start ambitious research activities dedicated to healthcare monitoring and to assistive technologies. These new studies address the analysis of more complex spatio-temporal activities (e.g. complex interactions, long term activities).

To achieve this objective, several topics need to be tackled. These topics can be summarized within two points: finer activity description and longer analysis. Finer activity description is needed, for instance, to discriminate the activities (e.g. sitting, walking, eating) of Alzheimer patients from those of healthy older people. It is essential to be able to pre-diagnose dementia and to provide better and more specialised care. Longer analysis is required when people monitoring aims at measuring the evolution of patient behavioural disorders. Setting up such long experiments with people with dementia has never been tried before but is necessary for real-world validation. This is one of the challenges of the European FP7 project Dem@Care, where several patient homes should be monitored over several months.

For this domain, a goal for Stars is to allow people with dementia to continue living in a self-sufficient manner in their own homes or residential centers, away from a hospital, as well as to allow clinicians and caregivers to remotely provide effective care and management. For all this to become possible, comprehensive monitoring of the daily life of the person with dementia is deemed necessary, since caregivers and clinicians will need a comprehensive view of the person’s daily activities, behavioural patterns and lifestyle, as well as changes in them, indicating the progression of their condition.

The development and ultimate use of novel assistive technologies by a vulnerable user group such as individuals with dementia, and the assessment methodologies planned by Stars, are not free of ethical or even legal concerns, even if many studies have shown how these Information and Communication Technologies (ICT) can be useful and well accepted by older people with or without impairments. Thus one goal of the Stars team is to design the right technologies that can provide the appropriate information to the medical carers while preserving people's privacy. Moreover, Stars will pay particular attention to ethical, acceptability, legal and privacy concerns that may arise, addressing them in a professional way following the corresponding established EU and national laws and regulations, especially when outside France.

As presented in 3.1, Stars aims at designing cognitive vision systems with perceptual capabilities to efficiently monitor people's activities. As a matter of fact, vision sensors can be seen as intrusive ones, even if no images are acquired or transmitted (only meta-data describing activities need to be collected). Therefore new communication paradigms and other sensors (e.g. accelerometers, RFID, and new sensors to come in the future) are also envisaged to provide the most appropriate services to the observed people, while preserving their privacy. To better understand ethical issues, Stars members are already involved in several ethical organizations. For instance, F. Bremond was a member of the ODEGAM “Commission Ethique et Droit” (a local association in the Nice area for ethical issues related to older people) from 2010 to 2011 and a member of the French scientific council for the national seminar on “La maladie d’Alzheimer et les nouvelles technologies - Enjeux éthiques et questions de société” in 2011. This council has in particular proposed a charter and guidelines for conducting research with dementia patients.

To address the acceptability issues, focus groups and HMI (Human Machine Interaction) experts will be consulted on the most adequate range of mechanisms to interact with older people and display information to them.

5. Software and Platforms

5.1. SUP

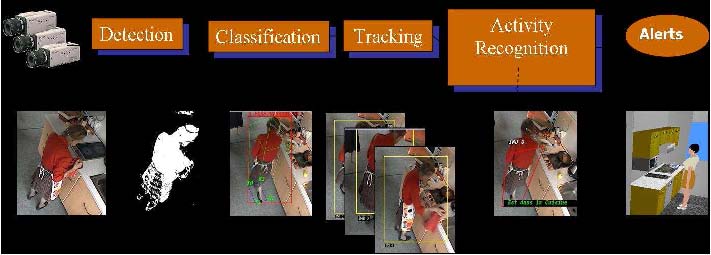

Figure 5. Tasks of the Scene Understanding Platform (SUP).

SUP is a Scene Understanding Software Platform written in C and C++ (see Figure 5). SUP is the continuation of the VSIP platform. SUP splits the video processing workflow into several modules, such as acquisition, segmentation, etc., up to activity recognition, to achieve the tasks (detection, classification, etc.) the platform supplies. Each module has a specific interface, and different plugins implementing these interfaces can be used for each step of the video processing. This generic architecture is designed to facilitate:

- integration of new algorithms in SUP;

- sharing of the algorithms among the Stars team.
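The interface/plugin organisation described above can be sketched as follows. This is only an illustration in Python (SUP itself is written in C and C++); the class names, the `process` method and the toy plugins are hypothetical and do not reflect the actual SUP API.

```python
from abc import ABC, abstractmethod


class Module(ABC):
    """Hypothetical interface that every processing step exposes."""

    @abstractmethod
    def process(self, data: dict) -> dict:
        """Read what the step needs from `data` and add its own results."""


class ThresholdSegmentation(Module):
    """Toy segmentation plugin: marks pixels brighter than a threshold."""

    def __init__(self, threshold: int = 128):
        self.threshold = threshold

    def process(self, data: dict) -> dict:
        frame = data["frame"]
        data["mask"] = [[1 if px > self.threshold else 0 for px in row]
                        for row in frame]
        return data


class ForegroundCounter(Module):
    """Toy detection plugin: counts the foreground pixels of the mask."""

    def process(self, data: dict) -> dict:
        data["foreground_pixels"] = sum(sum(row) for row in data["mask"])
        return data


def run_chain(modules, data):
    """Run the plugins in order; any plugin can be swapped for another
    implementation of the same interface without touching the others."""
    for module in modules:
        data = module.process(data)
    return data


result = run_chain([ThresholdSegmentation(100), ForegroundCounter()],
                   {"frame": [[10, 200], [150, 90]]})
print(result["foreground_pixels"])
```

Replacing `ThresholdSegmentation` with another segmentation plugin leaves the rest of the chain unchanged, which is the property the architecture is meant to provide.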

Currently, 15 plugins are available, covering the whole processing chain. Several plugins use the Genius platform, an industrial platform based on VSIP and exploited by Keeneo. The goals of SUP are twofold:

- From a video understanding point of view, to allow Stars researchers to share the implementation of their work through this platform.

- From a software engineering point of view, to integrate the results of the dynamic management of vision applications when applied to video analytics.

5.2. ViSEvAl

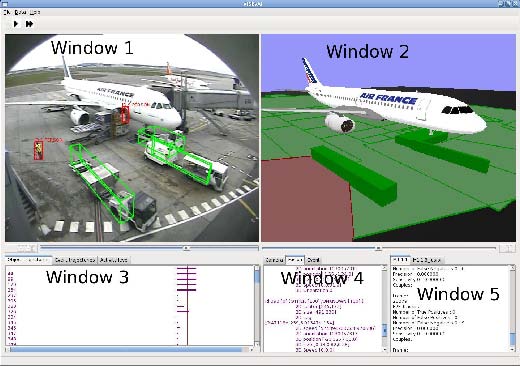

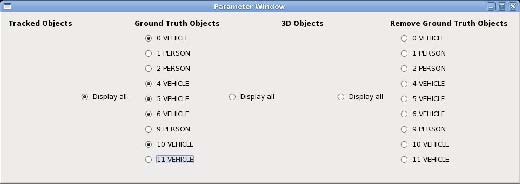

ViSEvAl is a software tool dedicated to the evaluation and visualization of video processing algorithm outputs. The evaluation of video processing algorithm results is an important step in video analysis research. In video processing, we identify 4 different tasks to evaluate: detection, classification and tracking of physical objects of interest, and event recognition.

The proposed evaluation tool (ViSEvAl, visualization and evaluation) respects three important properties:

- To be able to visualize the algorithm results.

- To be able to visualize the metrics and evaluation results.

- For users to easily modify or add new metrics.

The ViSEvAl tool is composed of two parts: a GUI to visualize results of the video processing algorithms and metrics results, and an evaluation program to evaluate automatically algorithm outputs on large amount of data. An XML format is defined for the different input files (detected objects from one or several cameras, groundtruth and events). XSD files and associated classes are used to check, read and write automatically the different XML files. The design of the software is based on a system of interfaces-plugins. This architecture allows the user to develop specific treatments according to her/his application (e.g. metrics). There are 6 interfaces:

- The video interface defines the way to load the images in the interface. For instance the user can develop her/his plugin based on her/his own video format. The tool is delivered with a plugin to load JPEG images and ASF videos.

- The object filter selects which objects (e.g. objects far from the camera) are processed for the evaluation. The tool is delivered with 3 filters.

- The distance interface defines how the detected objects match the ground-truth objects based on their bounding box. The tool is delivered with 3 plugins comparing 2D bounding boxes and 3 plugins comparing 3D bounding boxes.

- The frame metric interface implements metrics (e.g. detection metric, classification metric, ...) which can be computed on each frame of the video. The tool is delivered with 5 frame metrics.

- The temporal metric interface implements metrics (e.g. tracking metric,...) which are computed on the whole video sequence. The tool is delivered with 3 temporal metrics.

- The event metric interface implements metrics to evaluate the recognized events. The tool provides 4 metrics.
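To make the roles of the distance and frame metric interfaces more concrete, here is a minimal sketch of one possible 2D bounding-box distance and one possible per-frame detection metric. The function names, the overlap criterion (intersection over union), the greedy matching and the 0.5 threshold are assumptions made for the example; they are not the plugins shipped with ViSEvAl.

```python
def overlap_ratio(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def frame_detection_metric(detections, ground_truth, min_overlap=0.5):
    """Greedy one-to-one matching, then precision and recall for one frame."""
    unmatched = list(ground_truth)
    true_positives = 0
    for det in detections:
        # Pick the still-unmatched ground-truth box that overlaps most.
        best = max(unmatched, key=lambda gt: overlap_ratio(det, gt),
                   default=None)
        if best is not None and overlap_ratio(det, best) >= min_overlap:
            unmatched.remove(best)
            true_positives += 1
    precision = true_positives / len(detections) if detections else 1.0
    recall = true_positives / len(ground_truth) if ground_truth else 1.0
    return precision, recall


detections = [(0, 0, 10, 10), (50, 50, 60, 60)]
ground_truth = [(1, 1, 11, 11), (100, 100, 110, 110)]
precision, recall = frame_detection_metric(detections, ground_truth)
print(precision, recall)
```

Keeping the distance and the metric in separate functions mirrors the separation between the two interfaces: the same metric can be evaluated with a different matching criterion by swapping `overlap_ratio`.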

Figure 6. GUI of the ViSEvAl software

The GUI is composed of 3 different parts:

1. The windows dedicated to result visualization (see Figure 6):

   - Window 1: the video window displays the current image and information about the detected and ground-truth objects (bounding boxes, identifier, type, ...).

   - Window 2: the 3D virtual scene displays a 3D view of the scene (3D avatars for the detected and ground-truth objects, context, ...).

   - Window 3: the temporal information about the detected and ground-truth objects, and about the recognized and ground-truth events.

   - Window 4: the description part gives detailed information about the objects and the events.

   - Window 5: the metric part shows the evaluation results of the frame metrics.

2. The object window enables the user to choose the object to be displayed (see Figure 7).

3. The multi-view window displays the different points of view of the scene (see Figure 8).

Figure 7. The object window enables users to choose the object to display

Figure 8. The multi-view window

The evaluation program saves, in a text file, the evaluation results of all the metrics for each frame (whenever it is appropriate), globally for all video sequences or for each object of the ground truth. The ViSEvAl software was tested and validated in the context of the Cofriend project through its partners (Akka,...). The tool is also used by IMRA, Nice hospital, Institute for Infocomm Research (Singapore),... The software version 1.0 was delivered to APP (French Program Protection Agency) in August 2010. ViSEvAl has been under the GNU Affero General Public License AGPL (http://www.gnu.org/licenses/) since July 2011. The tool is available on the web page: http://www-sop.inria.fr/teams/pulsar/EvaluationTool/ViSEvAl_Description.html

5.3. Clem

The Clem Toolkit [68] (see Figure 9) is a set of tools devoted to design, simulate, verify and generate code for LE [19], [82] programs. LE is a synchronous language supporting a modular compilation. It also supports automata possibly designed with a dedicated graphical editor.

Each LE program is compiled later into lec and lea files. Then when we want to generate code for different backends, depending on their nature, we can either expand the lec code of programs in order to resolve all abstracted variables and get a single lec file, or we can keep the set of lec files where all the variables of the main program are defined. Then, the finalization will simplify the final equations and code is generated for simulation, safety proofs, hardware description or software code. Hardware description (Vhdl) and software code (C) are supplied for LE programs as well as simulation. Moreover, we also generate files to feed the NuSMV model checker [65] in order to perform validation of program behaviors.

6. New Results

6.1. Introduction

This year Stars has proposed new algorithms related to its three main research axes: perception for activity recognition, semantic activity recognition and software engineering for activity recognition.

6.1.1. Perception for Activity Recognition

Participants: Julien Badie, Slawomir Bak, Vasanth Bathrinarayanan, Piotr Bilinski, François Brémond, Guillaume Charpiat, Duc Phu Chau, Etienne Corvée, Carolina Garate, Vaibhav Katiyar, Ratnesh Kumar, Srinidhi Mukanahallipatna, Marco San Biago, Silviu Serban, Malik Souded, Kartick Subramanian, Anh Tuan Nghiem, Monique Thonnat, Sofia Zaidenberg.

This year Stars has extended an algorithm for automatically tuning the parameters of the people tracking algorithm. We have evaluated the algorithm for re-identification of people through a camera network while taking into account a large variety of potential features together with practical constraints. We have designed several original algorithms for the recognition of short actions and validated their performance on several benchmarking databases (e.g. ADL). We have also worked on video segmentation and representation, with different approaches and applications.

Figure 9. The Clem Toolkit

More precisely, the new results for perception for activity recognition concern:

- Background Subtraction and People Detection in Videos (6.2)

- Tracking and Video Representation (6.3)

- Video segmentation with shape constraint (6.4)

- Articulating motion (6.5)

- Lossless image compression (6.6)

- People detection using RGB-D cameras (6.7)

- Online Tracking Parameter Adaptation based on Evaluation (6.8)

- People Detection, Tracking and Re-identification Through a Video Camera Network (6.9)

- People Retrieval in a Network of Cameras (6.10)

- Global Tracker : an Online Evaluation Framework to Improve Tracking Quality (6.11)

- Human Action Recognition in Videos (6.12)

- 3D Trajectories for Action Recognition Using Depth Sensors (6.13)

- Unsupervised Sudden Group Movement Discovery for Video Surveillance (6.14)

- Group Behavior Understanding (6.15)

6.1.2. Semantic Activity Recognition

Participants: Guillaume Charpiat, Serhan Cosar, Carlos-Fernando Crispim Junior, Hervé Falciani, Baptiste Fosty, Qiao Ma, Rim Romdhane.

During this period, we have thoroughly evaluated the generic event recognition algorithm using both sensors (RGB and RGBD video cameras). This algorithm has been tested on more than 70 videos of older adults performing 15 min of physical exercises and cognitive tasks. In the Paris subway, we have been able to demonstrate live recognition of group behaviours. We have also been able to store the meta-data (e.g. people trajectories) generated from the processing of 8 video cameras, each recording lasting 2 or 3 days. From these meta-data, we have automatically discovered a few hundred rare events, such as loitering, collapsing, ..., to display on the screen of subway security operators.

Concerning semantic activity recognition, the contributions are:

- Evaluation of an Activity Monitoring System for Older People Using Fixed Cameras (6.16)

- A Framework for Activity Detection of Older People Using Multiple Sensors (6.17)

- Walking Speed Detection on a Treadmill using an RGB-D Camera (6.18)

- Serious Game for older adults with dementia (6.19)

- Unsupervised Activity Learning and Recognition (6.20)

- Extracting Statistical Information from Videos with Data Mining (6.21)

6.1.3. Software Engineering for Activity Recognition

Participants: François Brémond, Daniel Gaffé, Julien Gueytat, Sabine Moisan, Anh Tuan Nghiem, Annie Ressouche, Jean-Paul Rigault, Luis-Emiliano Sanchez.

This year Stars has continued the development of the SUP platform. The latter is the backbone of the team experiments to implement the new algorithms. We continue to improve our meta-modelling approach to support the development of video surveillance applications based on SUP. This year we have focused on metrics to drive dynamic architecture changes and on component management. We continue the development of a scenario analysis module (SAM) relying on formal methods to support activity recognition in the SUP platform. We are improving the CLEM toolkit and we rely on it to build SAM. Finally, we are improving the way we perform adaptation in the definition of a Multiple Services for Device Adaptive Platform for scenario recognition.

The contributions for this research axis are:

- SUP (6.22)

- Model-Driven Engineering for Activity Recognition (6.23)

- Scenario Analysis Module (6.24)

- The Clem Workflow (6.25)

- Multiple Services for Device Adaptive Platform for Scenario Recognition (6.26)

6.2. Background Subtraction and People Detection in Videos

Participants: Vasanth Bathrinarayanan, Srinidhi Mukanahallipatna, Silviu Serban, François Brémond.

Keywords: Background Subtraction, People detection, Automatic parameter selection for algorithm

Background Subtraction

Background subtraction is a vital real-time low-level algorithm, which differentiates foreground and background objects in a video. We have thoroughly evaluated our Extended Gaussian Mixture model containing a shadow-removal algorithm, which performs better than other state-of-the-art methods. Figure 10 shows the comparison of the results of 13 background subtraction algorithms on a challenging railway station monitoring video dataset from Project CENTAUR, which includes illumination changes, shadows, occlusion and moving trains. Our algorithm performs best in terms of results, with good processing speed too. Figure 11 is an example of our background subtraction algorithm’s output on an indoor sequence of surveillance footage from Project SUPPORT.

Ongoing research includes automatic parameter selection for this algorithm based on some learnt context. Since tuning the parameters is a daunting task for a non-experienced person, we try to learn some context information in a video, such as occlusion, contrast variation, density of foreground, texture, etc., and map it to appropriate parameters of the segmentation algorithm. The aim is to design a controller that automatically adapts the parameters of an algorithm as the scene context changes over time.
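To make the background subtraction step concrete, here is a deliberately simplified sketch that models a single pixel with one running Gaussian. It is not the Extended Gaussian Mixture model evaluated above (which uses a mixture and shadow removal); the class name and the values of the learning rate, initial variance and threshold factor `k` are assumptions chosen for the example.

```python
class RunningGaussianPixel:
    """Single-Gaussian background model for one pixel (a simplification
    of a per-pixel Gaussian mixture)."""

    def __init__(self, initial, variance=25.0, learning_rate=0.05, k=2.5):
        self.mean = float(initial)
        self.variance = variance
        self.learning_rate = learning_rate
        self.k = k  # a value further than k standard deviations is foreground

    def update(self, value):
        """Return True if `value` is foreground; adapt the model otherwise."""
        diff = value - self.mean
        is_foreground = diff * diff > (self.k ** 2) * self.variance
        if not is_foreground:
            a = self.learning_rate
            self.mean += a * diff
            self.variance = (1 - a) * self.variance + a * diff * diff
        return is_foreground


pixel = RunningGaussianPixel(initial=100)
labels = [pixel.update(v) for v in [101, 99, 102, 180, 100]]
print(labels)
```

The parameters exposed in the constructor are the kind of values that an automatic controller would adapt when the scene context changes.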

Figure 11. Background Subtraction result on a video to count the number of people walking through the door after using their badge inside the terminal area (Project SUPPORT - Autonomous Monitoring for Securing European Ports)

People Detection

A new robust real-time person detection system was proposed [45], which aims to serve as a solid foundation for developing solutions at an elevated level of reliability. Our belief is that clever handling of input data combined with effective training algorithms is key to obtaining top performance. We use a comprehensive training method on a very large training database, based on random sampling, that compiles optimal classifiers with minimal bias and overfit rate. Building upon recent advances in multi-scale feature computations, our approach attains state-of-the-art accuracy while running at a high frame rate.

Our method combines detection techniques that greatly reduce computational time without compromising accuracy. We use efficient LBP and MCT features which we compute on integral images for optimal retrieval of rectangular region intensity and nominal scaling error. AdaBoost is used to create cascading classifiers with significantly reduced detection time. We further improve detection speed by using the soft cascades approach and by transferring all important computation from the detection stage to the training stage. Figure 12 shows some output samples from the various datasets on which it was tested.
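The role of integral images in the retrieval of rectangular region intensities can be illustrated with the standard summed-area table below. This is a generic textbook sketch, not the detector's implementation; the function names are chosen for the example.

```python
def integral_image(img):
    """Summed-area table with an extra zero row and zero column."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        for x in range(w):
            ii[y + 1][x + 1] = (img[y][x] + ii[y][x + 1]
                                + ii[y + 1][x] - ii[y][x])
    return ii


def rect_sum(ii, x0, y0, x1, y1):
    """Sum of the pixels in [x0, x1) x [y0, y1), using four lookups."""
    return ii[y1][x1] - ii[y0][x1] - ii[y1][x0] + ii[y0][x0]


img = [[1, 2, 3],
       [4, 5, 6],
       [7, 8, 9]]
ii = integral_image(img)
print(rect_sum(ii, 1, 1, 3, 3))
```

Once the table is built, the sum over any rectangle costs four lookups regardless of the rectangle size, which is what makes features computed over many rectangular regions affordable.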

6.3. Tracking and Video Representation

Participants: Ratnesh Kumar, Guillaume Charpiat, Monique Thonnat.

keywords: Fibers, Graph Partitioning, Message Passing, Iterative Conditional Modes, Video Segmentation, Video Inpainting

Multiple Object Tracking

The objective is to find trajectories of objects (belonging to a particular category) in a video. To find possible occupancy locations, an object detector is applied to all frames of a video, yielding bounding boxes. Detectors are not perfect and may provide false detections; they may also miss objects sometimes. We build a graph of all detections, and aim at partitioning the graph into object trajectories. Edges in the graph encode factors between detections, based on the following:

- Number of common point tracks between bounding boxes (the tracks are obtained from an optical-flow-based point tracker)

- Global appearance similarity (based on the pixel colors inside the bounding boxes)

- Trajectory straightness: for three bounding boxes at different frames, we compute the Laplacian (centered at the middle frame) of the centroids of the boxes.

- Repulsive constraint: two detections in the same frame cannot belong to the same trajectory.

We compute the partitions by using sequential tree re-weighted message passing (TRW-S). To avoid local minima, we use a label flipper motivated by the Iterative Conditional Modes algorithm. We apply our approach to typical surveillance videos where the objects of interest are humans. Comparative quantitative results can be seen in Tables 1 and 2 for two videos. The evaluation metrics considered are: Recall, Precision, Average False Alarms Per Frame (FAF), Number of Groundtruth Trajectories (GT), Number of Mostly Tracked Trajectories (MT), Number of Fragments (Frag), Number of Identity Switches (IDS), Multiple Object Tracking Accuracy (MOTA) and Multiple Object Tracking Precision (MOTP). This work has been submitted to CVPR’14.
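The trajectory straightness factor mentioned above can be sketched as the norm of a discrete Laplacian of box centroids. The sketch assumes three equally spaced frames and boxes given as `(x1, y1, x2, y2)`; the function names, and the way the value would be turned into an edge weight, are assumptions and not the authors' exact formulation.

```python
def centroid(box):
    """Centre of a box given as (x1, y1, x2, y2)."""
    x1, y1, x2, y2 = box
    return ((x1 + x2) / 2.0, (y1 + y2) / 2.0)


def straightness_cost(prev_box, mid_box, next_box):
    """Norm of the discrete Laplacian c_prev - 2 * c_mid + c_next of the
    centroids; it is zero for uniform motion along a straight line."""
    (px, py), (mx, my), (nx, ny) = map(centroid,
                                       (prev_box, mid_box, next_box))
    lx = px - 2 * mx + nx
    ly = py - 2 * my + ny
    return (lx * lx + ly * ly) ** 0.5


straight = straightness_cost((0, 0, 2, 2), (2, 0, 4, 2), (4, 0, 6, 2))
bent = straightness_cost((0, 0, 2, 2), (2, 0, 4, 2), (2, 2, 4, 4))
print(straight, bent)
```

A low value supports grouping the three detections into the same trajectory, while a high value indicates an abrupt change of direction or speed.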

Table 1. Towncenter Video Output

| Method | MOTA | MOTP | Detector |
|---|---|---|---|
| [59] (450-750) | 56.8 | 79.6 | HOG |
| Ours (450-750) | 53.5 | 69.1 | HOG |

Table 2. Comparison with recent proposed approaches on PETS S2L1 Video

| Method | Recall | Precision | FAF | GT | MT | Frag | IDS |
|---|---|---|---|---|---|---|---|
| [77] | 96.9 | 94.1 | 0.36 | 19 | 18 | 15 | 22 |
| Ours | 95.4 | 93.4 | 0.28 | 19 | 18 | 42 | 13 |

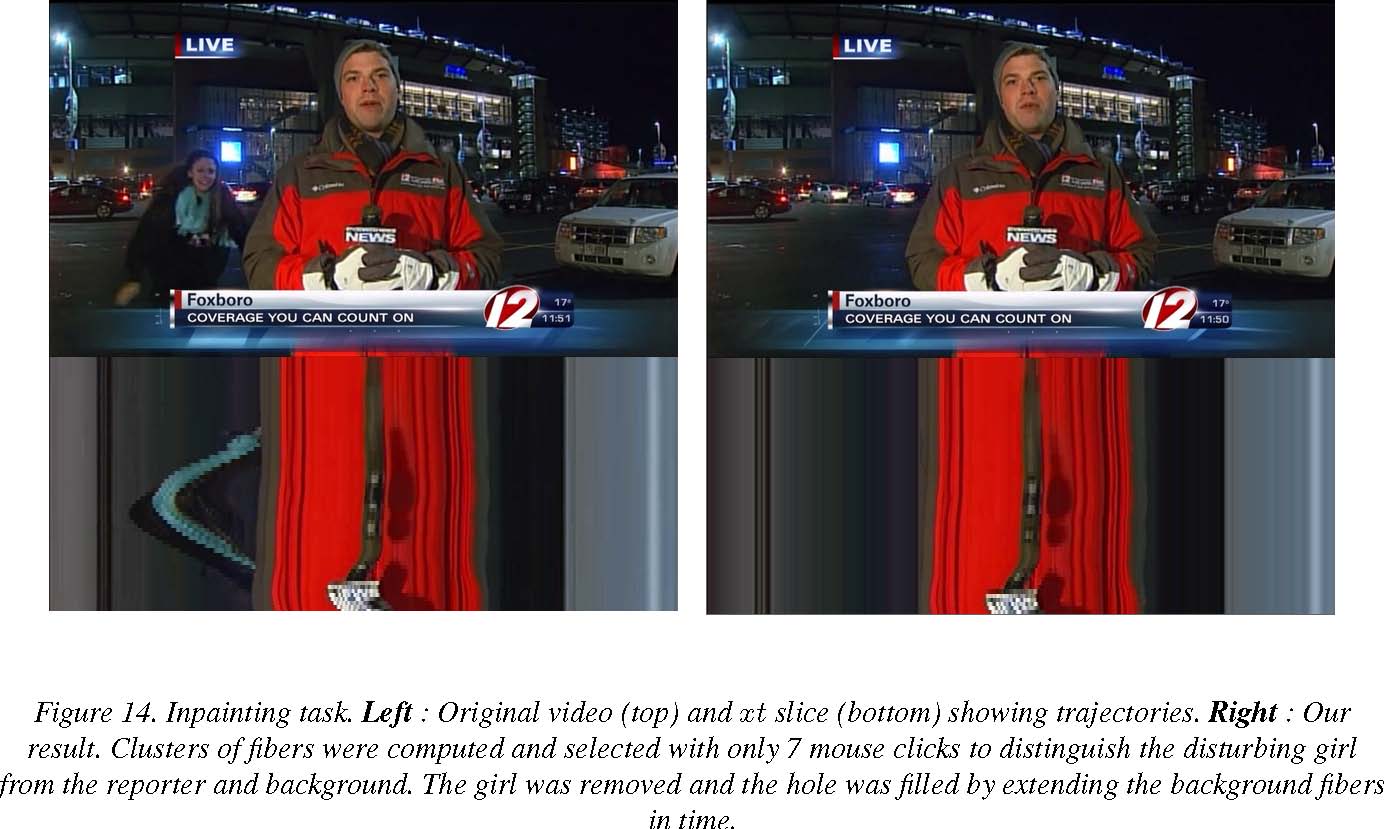

Video Representation

We continued our work from the previous year on Fiber-Based Video Representation. During this year we focused on obtaining results competitive with the state of the art (Figure 13). The usefulness of our novel representation is demonstrated by a simple video inpainting task. Here a user input of only 7 clicks is required to remove the dancing girl disturbing the news reporter (Figure 14).

This work has been accepted for publication next year [41].

6.4. Video segmentation with shape constraint

Participant: Guillaume Charpiat.

keywords: video segmentation, graph-cut, shape growth, shape statistics, shape prior, dynamic time warping

6.4.1. Video segmentation with growth constraint

This is joint work with Yuliya Tarabalka (Ayin Inria team) and Björn Menze (ETH Zurich, also MIT and collaborator of Asclepios Inria team).

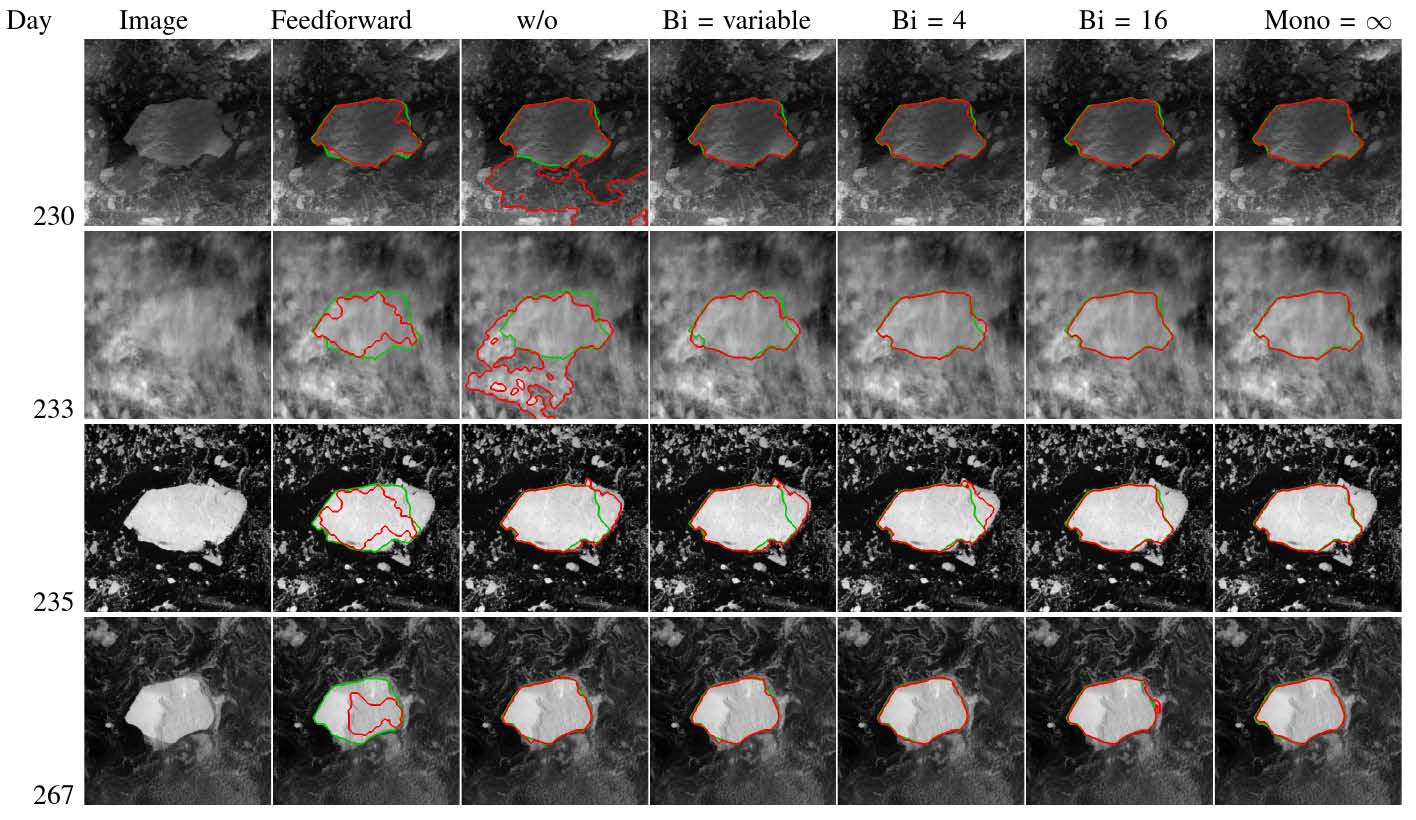

Context: One of the important challenges in computer vision is the automatic segmentation of objects in videos. This task becomes more difficult when image sequences are subject to low signal-to-noise ratio or low contrast between intensities of neighboring structures in the image scene. Such challenging data is acquired routinely, for example in medical imaging or in satellite remote sensing. While individual frames could be analyzed independently, temporal coherence in image sequences provides crucial information to make the problem easier. In this work, we focus on segmenting shapes in image sequences which only grow or shrink in time, and on making use of this knowledge as a constraint to help the segmentation process.

Approach and applications: Last year we proposed an approach based on graph-cut (see Figure 15), able to efficiently obtain (in linear time in the number of pixels in practice), for any given video, its globally optimal segmentation satisfying the growth constraint. This year we applied this method to three different applications:

- forest fires in satellite images,

- organ development in medical imaging (brain tumor, in multimodal MRI 3D volumes),

- sea ice melting in satellite observation, with a shrinking constraint instead of growth (see Figure 16).

The results on the first application were published in IGARSS (International Geoscience and Remote Sensing Symposium) [48], while the last two applications and the theory were published in BMVC [47]. A journal paper is also currently under review. A science popularization article was also published [53].

Unrelated to this work, but also with the Ayin Inria team, the last of a series of articles about optimizers for point process models was published [40], introducing graph-cuts in the multiple birth and death approach in order to detect numerous objects that should not overlap.
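The effect of the growth constraint used in this section can be illustrated on a single pixel. If spatial regularisation is ignored (a strong simplification: the actual method solves a graph-cut over the whole video), a growing shape means that each pixel switches from background to foreground at most once, so its optimal labels are obtained by choosing the best switching time. The function name and the per-frame costs below are hypothetical.

```python
def best_growth_labeling(cost_bg, cost_fg):
    """Optimal non-decreasing 0/1 labeling of one pixel over time.

    cost_bg[t] / cost_fg[t] is the cost of labeling frame t as
    background / foreground. The pixel is background before the switch
    time and foreground from the switch time onwards.
    """
    n_frames = len(cost_bg)
    total = sum(cost_fg)  # switch time 0: foreground in every frame
    best_cost, best_switch = total, 0
    for s in range(1, n_frames + 1):
        # Moving the switch from s-1 to s relabels frame s-1 as background.
        total += cost_bg[s - 1] - cost_fg[s - 1]
        if total < best_cost:
            best_cost, best_switch = total, s
    labels = [0] * best_switch + [1] * (n_frames - best_switch)
    return labels, best_cost


# Frame 3 is noisy: taken alone it prefers background, although the pixel
# already belongs to the shape in frame 2.
cost_bg = [0.1, 0.2, 0.9, 0.3, 0.8]
cost_fg = [0.9, 0.8, 0.1, 0.7, 0.2]
labels, cost = best_growth_labeling(cost_bg, cost_fg)
print(labels, cost)
```

An independent frame-by-frame decision would label frame 3 as background; the constraint overrides this noisy evidence because a shape that only grows cannot lose the pixel again.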

6.4.2. Video segmentation with statistical shape prior

This is joint work with Maximiliano Suster (leader of the Neural Circuits and Behaviour Group at Bergen University, Norway).

Context : The zebrafish larva is a model organism widely used in biology to study genetics. Therefore, analyzing its behavior in video sequences is particularly important for this research field. For this, there is a need to segment the animal in the video, in order to estimate its speed, and also more precisely to extract its shape, in order to express for instance how much it is bent, how fast it bends, etc. However, as the animal is stimulated by the experimenter with a probe, the full zebrafish larva is not always visible because of occlusion.

Figure 18. First deformation modes of the shape prior used in the segmentation above.

Approach : We build a shape prior based on a training set of examples of non-occluded shapes, and use it to segment new images where the animal is occluded. This is however not straightforward.

- Building a training set of shape deformations: Given a set of training images containing non-occluded animals, we extract their contours via multiple robust thresholdings and mathematical morphology operations. For each contour, we then estimate automatically the location of the tip of the tail. We then compute point-to-point correspondences between all contours, using a modified version of Dynamic Time Warping, as well as the approximate tip location information. This is done in a translation- and rotation-invariant way.

- Building the shape prior : Based on these matchings, the mean shape is computed, as well as modes of deformation with PCA.

- Segmenting occluded images: Images with occluded shapes are pre-processed in a similar way to non-occluded ones; however, the resulting segmentation contains not only the parts of the larva but also the probe, which has potentially similar colors and location, and is moving. To identify the probe, whose shape depends on the video sequence, we make use of its rigidity and of temporal coherency. Then a segmentation criterion is designed to push an active contour towards the zones of interest (in a way that is robust to initialization), while keeping a shape which is feasible according to the shape prior.

Examples of data and results for a preliminary algorithm are shown in Figure 17, with the associated shape prior shown in Figure 18.
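The contour matching step relies on a modified version of Dynamic Time Warping. For reference, the classic unmodified algorithm on two 1D sequences can be sketched as follows; what the sequences would contain for contours (for instance a local descriptor sampled along each curve) and the modifications used in this work are not reproduced here.

```python
def dtw_distance(a, b):
    """Classic dynamic time warping cost between two 1D sequences."""
    inf = float("inf")
    n, m = len(a), len(b)
    # d[i][j] is the best cost of aligning a[:i] with b[:j].
    d = [[inf] * (m + 1) for _ in range(n + 1)]
    d[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = abs(a[i - 1] - b[j - 1])
            d[i][j] = cost + min(d[i - 1][j],      # a[i-1] matched again
                                 d[i][j - 1],      # b[j-1] matched again
                                 d[i - 1][j - 1])  # both sequences advance
    return d[n][m]


print(dtw_distance([0, 1, 2], [0, 1, 1, 2]))
print(dtw_distance([0, 0], [1, 1]))
```

Backtracking through the table `d` would give the point-to-point correspondences themselves; only the alignment cost is returned here to keep the sketch short.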

6.5. Articulating motion

Participant: Guillaume Charpiat.

keywords: shape evolution, metrics, gradient descent, Finsler gradient, Banach space, piecewise-rigidity, piecewise-similarity

This is joint work with Giacomo Nardi, Gabriel Peyré and François-Xavier Vialard (Ceremade, Paris-Dauphine University).

Context in optimization: A fact which is often ignored when optimizing a criterion with a gradient descent is that the gradient of a quantity depends on the metric chosen. In many domains, people choose by default the underlying L2 metric, while it is not always relevant. Here we extend the set of metrics that can be considered, by building gradients for metrics that do not derive from inner products, with examples of metrics involving the L1 norm, possibly of a derivative.

Mathematical foundations: This work introduces a novel steepest descent flow in Banach spaces. This extends previous works on generalized gradient descent, notably the work of Charpiat et al. [6], to the setting of Finsler metrics. Such a generalized gradient allows one to take into account a prior on deformations (e.g., piecewise rigid) in order to favor some specific evolutions. We define a Finsler gradient descent method to minimize a functional defined on a Banach space and we prove a convergence theorem for such a method. In particular, we show that the use of non-Hilbertian norms on Banach spaces is useful to study non-convex optimization problems where the geometry of the space might play a crucial role to avoid poor local minima.

Application to shape evolution: We performed some applications to the curve matching problem. In particular, we characterized piecewise-rigid deformations on the space of curves and we studied several models to perform piecewise-rigid evolutions (see Figure 19). We also studied piecewise-similar evolutions. Piecewise-rigidity intuitively corresponds to articulated motions, while piecewise-similarity further allows the elastic stretching of each articulated part independently. One practical consequence of our work is that any deformation to be applied to a shape can be easily and optimally transformed into an articulated deformation with few articulations, the number and location of the articulations being not known in advance. Surprisingly, this problem is actually convex.

An article was submitted to the journal Interfaces and Free Boundaries [52].
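The dependence of the descent direction on the metric can be illustrated in finite dimension. The sketch below is not the Finsler gradient on curves studied in this work; it is only a toy example, and the function name `steepest_descent_direction` and the norm labels are assumptions made for illustration. For a given gradient vector, it returns the unit-norm direction that decreases the criterion fastest, where "unit norm" is measured with the L2, L∞ or L1 norm.

```python
import math

def steepest_descent_direction(grad, norm):
    """Direction v with ||v|| <= 1 that minimizes the directional derivative <grad, v>.

    The answer depends on the norm used to measure v:
      "l2"   -> v = -grad / ||grad||_2   (the usual gradient direction)
      "linf" -> v = -sign(grad)          (every coordinate moves at full speed)
      "l1"   -> only the coordinate with the largest |grad_i| moves
    """
    if all(g == 0 for g in grad):
        return [0.0] * len(grad)
    if norm == "l2":
        n = math.sqrt(sum(g * g for g in grad))
        return [-g / n for g in grad]
    if norm == "linf":
        return [-math.copysign(1.0, g) if g != 0 else 0.0 for g in grad]
    if norm == "l1":
        i = max(range(len(grad)), key=lambda k: abs(grad[k]))
        return [-math.copysign(1.0, grad[i]) if k == i else 0.0
                for k in range(len(grad))]
    raise ValueError("unknown norm: " + norm)

# Same gradient, three different descent directions.
grad = [3.0, -4.0]
directions = {name: steepest_descent_direction(grad, name)
              for name in ("l2", "linf", "l1")}
```

The three directions all decrease the criterion, but they follow different paths, which is the finite-dimensional analogue of the effect shown in Figure 19.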

6.6. Lossless image compression

Participant: Guillaume Charpiat.

keywords: image compression, entropy coding, graph-cut

This is joint work with Yann Ollivier and Jamal Atif from the TAO Inria team.

Context: Understanding, modelling, predicting and compressing images are tightly linked, in that any good predictor can be turned into a good compressor via entropy coding (such as Huffman coding or arithmetic coding). Indeed, with such techniques, the more predictable an event E is, i.e. the higher its probability p(E), the easier it is to compress, with coding cost −log(p(E)). We are therefore interested in image compression as a way to build better models of images.
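The link between prediction and compression can be checked numerically. The sketch below sums the ideal coding cost −log(p) over a sequence for two toy predictors; the base-2 logarithm (cost in bits), the function name `ideal_code_length_bits` and the two predictors are assumptions chosen for illustration.

```python
import math

def ideal_code_length_bits(symbols, predict):
    """Sum of -log2 p(symbol | past) over the sequence, in bits.

    `predict(past)` is a hypothetical callback returning a dict that maps
    each possible symbol to its predicted probability.
    """
    total = 0.0
    for t, symbol in enumerate(symbols):
        p = predict(symbols[:t])[symbol]
        total += -math.log2(p)
    return total

def uniform(past):
    # Knows nothing about the data: both symbols equally likely.
    return {0: 0.5, 1: 0.5}

def skewed(past):
    # Has learned that 0 is much more frequent than 1.
    return {0: 0.9, 1: 0.1}

data = [0] * 9 + [1]
cost_uniform = ideal_code_length_bits(data, uniform)
cost_skewed = ideal_code_length_bits(data, skewed)
```

On this sequence the predictor that matches the data statistics yields the shorter code, which is why a better image model translates into better compression.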

Figure 19. Example of use of the Finsler gradient for the piecewise-rigid evolution of curves. Given an initial shape S and a target shape T, as well as a shape dissimilarity measure E(S) = Dissim(S, T), any classical gradient descent on E(S) would draw the evolving shape S towards the target T. However, the metric used to compute the gradient changes the path followed. The top row is the evolution obtained with a Sobolev gradient H1, which has the property of spatially smoothing the flow along the curve to avoid irregular deformations. This is however not sufficient. The bottom row uses the Finsler gradient instead, with a metric favoring piecewise-rigid deformations.

MDL approach: State-of-the-art sequential prediction of time series based on the advice of various experts combines the different expert predictions, with weights depending on their individual past performance (cf. the work of Gilles Stoltz and Peter Grünwald). This approach originates from the Minimum Description Length (MDL) principle. It was however designed for 1D data such as time series, and is not directly applicable to 2D data such as images. Consequently, our aim has been to adapt this approach to image compression, where time series are replaced with 2D series of pixel colors, and where experts are predictors of the color of a pixel given the colors of its neighbors.

New method and results: This year, we focused on lossless greyscale image compression and proposed to encode any image with two maps: one storing the expert chosen for each pixel, and one storing the encoding of the intensity of each pixel according to its expert. To compress the first map efficiently, we require the choices of experts to be spatially coherent, and then encode the boundaries of the experts' areas. To find a suitable expert map, we explicitly optimize the total encoding cost, formulated as an energy minimization problem and solved with graph-cuts. An example of an expert map obtained this way is shown in Figure 20. Preliminary results with a hierarchical ordering scheme already compete with standard lossless compression techniques (PNG, lossless JPEG2000, JPEG-LS).
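The trade-off between per-pixel coding cost and spatial coherence of the expert map can be sketched on a single scanline. The method described above works on 2D images and uses graph-cuts; the example below is only a 1D stand-in solved exactly by dynamic programming. The function name `best_expert_map`, the cost table and the constant `switch_cost` (a stand-in for the cost of encoding a boundary between expert areas) are all assumptions.

```python
def best_expert_map(costs, switch_cost):
    """Minimize sum_i costs[i][label_i] + switch_cost * (number of label changes).

    costs[i][k] is the assumed number of bits needed to encode pixel i with
    expert k. Returns (total_cost, labels) for a 1D sequence of pixels.
    """
    n, k = len(costs), len(costs[0])
    best = list(costs[0])   # best[j]: cheapest labeling so far that ends with expert j
    back = []               # back-pointers used to recover the labels
    for i in range(1, n):
        prev_min = min(range(k), key=lambda j: best[j])
        new_best, ptr = [], []
        for j in range(k):
            stay = best[j]                        # keep the same expert
            switch = best[prev_min] + switch_cost  # come from the cheapest expert
            if stay <= switch:
                new_best.append(stay + costs[i][j])
                ptr.append(j)
            else:
                new_best.append(switch + costs[i][j])
                ptr.append(prev_min)
        best = new_best
        back.append(ptr)
    last = min(range(k), key=lambda j: best[j])
    labels = [last]
    for ptr in reversed(back):
        labels.append(ptr[labels[-1]])
    labels.reverse()
    return best[last], labels

# Expert 0 is cheap on the first two pixels, expert 1 on the last two.
costs = [[1, 5], [1, 5], [5, 1], [5, 1]]
total, labels = best_expert_map(costs, switch_cost=2)
```

With a small switching cost the map follows the locally best expert; with a large one it stays constant, which mirrors the coherence constraint placed on the first map.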

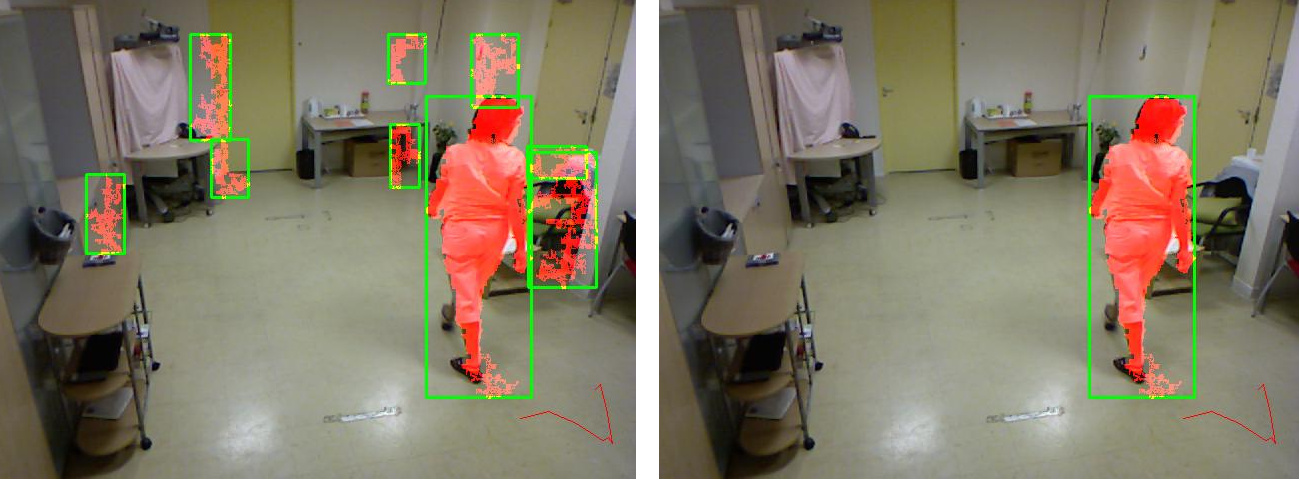

6.7. People detection using RGB-D cameras

Participants: Anh-Tuan Nghiem, François Brémond.

keywords: people detection, HOG, RGB-D cameras

With the introduction of low-cost RGB-D cameras such as the Microsoft Kinect, video monitoring systems have another option for indoor monitoring besides conventional RGB cameras. Compared with conventional RGB cameras, the reliable depth information from RGB-D cameras makes people detection easier. In addition, manufacturers of RGB-D cameras provide various libraries for people detection, skeleton detection, hand detection, etc. However, perhaps due to the high variance of depth measurements when objects are too far from the camera, these libraries only work when people are within about 0.5 to 4.5 m of the camera. Therefore, for our own video monitoring system, we built our own people detection framework consisting of a background subtraction component, a people classifier, a tracker and a noise removal component, as illustrated in Figure 21.

In this system, the background subtraction algorithm is designed specifically for depth data. In particular, the algorithm employs temporal filters to detect noise caused by imperfect depth measurements on some special surfaces.
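The report does not detail the temporal filters, so the following is only one plausible sketch of the idea, under stated assumptions: depth is given in millimetres, a value of 0 means "no reading", and a pixel is flagged as noisy when it flips between valid and missing readings too often within a short window. The function name `flickering_pixels` and the threshold `max_flips` are hypothetical.

```python
def flickering_pixels(depth_frames, max_flips=2):
    """Flag pixels whose depth reading appears and disappears too often.

    depth_frames: list of frames, each a list of rows of depth values
    (assumed in mm, with 0 meaning "no reading").
    Returns a boolean mask with the same height and width as one frame.
    """
    height, width = len(depth_frames[0]), len(depth_frames[0][0])
    noisy = [[False] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            flips = 0
            for prev, cur in zip(depth_frames, depth_frames[1:]):
                # Count transitions between "valid" and "missing".
                if (prev[y][x] == 0) != (cur[y][x] == 0):
                    flips += 1
            noisy[y][x] = flips > max_flips
    return noisy

# One row, two pixels: the first is stable, the second flickers.
frames = [[[1000, 0]], [[1000, 1200]], [[1001, 0]], [[1000, 1200]], [[999, 0]]]
mask = flickering_pixels(frames)
```

A mask of this kind could then be passed to later stages, so that regions dominated by flagged pixels are treated as measurement noise and not as foreground.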

Figure 21. The people detection framework

The people classification part extends the work in [79]. From the foreground regions provided by the background subtraction algorithm, the classifier first searches for people's heads and then extracts HOG-like features (Histogram of Oriented Gradients on a binary image) above the head and the shoulders. Finally, these features are classified by an SVM classifier to recognise people.

The tracker links detected foreground regions in the current frame with the ones from previous frames. By linking objects in different frames, the tracker provides useful history information to remove noise as well as to improve the sensitivity of the people classifier.

Finally, the noise removal algorithm uses the object history constructed by the tracker to remove two types of noise: noise detected by the temporal filter of the background subtraction algorithm, and noise from the high variance of depth measurements on objects far from the camera. Figure 22 illustrates the effect of noise removal on the detection results.
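How a tracker's history can help remove noise may be sketched as follows. This is not the tracker used in the framework; it is a minimal greedy nearest-centroid linker, followed by a filter that discards objects seen in too few frames. The function names, the distance threshold `max_dist` and the minimum track length are assumptions.

```python
import math

def link_detections(frames, max_dist):
    """Greedy nearest-centroid linking of detections across frames.

    frames: list (one entry per frame) of lists of (x, y) region centroids.
    Returns a list of tracks, each a list of (frame_index, (x, y)).
    """
    tracks = []
    active = []  # indices of tracks that were extended in the previous frame
    for t, detections in enumerate(frames):
        unmatched = list(detections)
        next_active = []
        for ti in active:
            if not unmatched:
                break
            last = tracks[ti][-1][1]
            nearest = min(unmatched, key=lambda d: math.dist(last, d))
            if math.dist(last, nearest) <= max_dist:
                tracks[ti].append((t, nearest))
                unmatched.remove(nearest)
                next_active.append(ti)
        # Every detection left over starts a new track.
        for d in unmatched:
            tracks.append([(t, d)])
            next_active.append(len(tracks) - 1)
        active = next_active
    return tracks

def remove_short_tracks(tracks, min_length):
    """Treat objects observed in fewer than min_length frames as noise."""
    return [track for track in tracks if len(track) >= min_length]

# A person moving to the right, plus one spurious detection in frame 1.
frames = [[(0, 0)], [(1, 0), (50, 50)], [(2, 0)]]
tracks = link_detections(frames, max_dist=5)
kept = remove_short_tracks(tracks, min_length=2)
```

The spurious detection forms a track of length one and is dropped, while the consistently observed object is kept.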

Figure 22. The people detection framework

The overall performance of our people detection framework is comparable to that of the detector provided by PrimeSense, the company behind the depth sensor of the Microsoft Kinect RGB-D camera. We are currently carrying out an extensive evaluation of the framework, and the results will be submitted to a conference in the near future.

6.8. Online Tracking Parameter Adaptation based on Evaluation

Participants: Duc Phu Chau, Julien Badie, Kartick Subramanian, François Brémond, Monique Thonnat.

keywords: Object tracking, parameter tuning, online evaluation, machine learning

Several approaches have been proposed for tracking mobile objects in videos [50]. For example, we recently proposed a new tracker based on co-inertia analysis (COIA) of object features [44]. However, parameter tuning remains a common issue for many trackers. To address this problem, we propose an online parameter tuning process that adapts a tracking algorithm to various scene contexts. The proposed approach makes two contributions: (1) an online tracking evaluation, and (2) a method to adapt tracking parameters online to scene contexts.

In an offline training phase, this approach learns how to tune the tracker parameters to cope with different contexts. Different learning schemes (e.g. neural network-based) are proposed. A context database is created at the end of this phase to support the control process of the considered tracking algorithm. This database contains satisfactory parameter values of this tracker for various contexts.

In the online control phase, once the tracking quality is evaluated as not good enough, the proposed approach computes the current context and tunes the tracking parameters using the learned values. The experimental results show that the proposed approach improves the performance of the tracking algorithm and outperforms recent state-of-the-art trackers. Figure 23 shows correct tracking results for four people while occlusions occur. Table 3 presents the tracking results of the proposed approach and of some recent state-of-the-art trackers. The proposed controller significantly increases the performance of an appearance-based tracker [63]. We obtain the best MT value (i.e. mostly tracked trajectories) compared to state-of-the-art trackers.
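The online control step can be sketched as a lookup in the learned context database. The context features, the parameter names, the quality score and the nearest-neighbour rule below are all hypothetical; the report only states that the database stores satisfactory parameter values for various contexts.

```python
import math

def tune_parameters(context, context_db, quality, quality_threshold):
    """Return learned parameters for the closest stored context, or None.

    context: tuple of numeric context features (hypothetical, e.g. object
             density and occlusion level).
    context_db: list of (context_features, parameters) pairs produced by
                the offline training phase.
    quality: current online tracking quality score (assumed higher = better).
    """
    if quality >= quality_threshold:
        return None  # tracking is good enough: keep the current parameters
    _, params = min(context_db, key=lambda entry: math.dist(entry[0], context))
    return params

# Hypothetical database with two learned contexts.
context_db = [
    ((0.1, 0.0), {"search_radius": 20, "appearance_weight": 0.8}),
    ((0.9, 0.7), {"search_radius": 8, "appearance_weight": 0.4}),
]
new_params = tune_parameters((0.8, 0.6), context_db,
                             quality=0.3, quality_threshold=0.5)
```

When the quality is above the threshold the function returns `None`, so the tracker is only reconfigured when the online evaluation reports a problem.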

Figure 23. Tracking results of four people in the sequence ShopAssistant2cor (Caviar dataset) are correct, even when occlusions happen.

Table 3. Tracking results for the Caviar dataset. The proposed controller significantly improves the tracking performance. MT: Mostly tracked trajectories, higher is better. PT: Partially tracked trajectories. ML: Mostly lost trajectories, lower is better. The best values are printed in bold.

| Approaches | MT (%) | PT (%) | ML (%) |
|---|---|---|---|
| Xing et al. [92] | 84.3 | 12.1 | 3.6 |
| Li et al. [76] | 84.6 | 14.0 | 1.4 |
| Kuo et al. [74] | 84.6 | 14.7 | **0.7** |
| D.P. Chau et al. [63] without the proposed approach | 78.3 | 16.0 | 5.7 |
| D.P. Chau et al. [63] with the proposed approach | **85.5** | 9.2 | 5.3 |

This work has been published in [33], [34].
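The MT, PT and ML percentages reported in Table 3 can be computed from the fraction of each ground-truth trajectory that is correctly tracked. The sketch below assumes the commonly used thresholds of 80% (mostly tracked) and 20% (mostly lost); the report does not state the thresholds, so they are exposed as parameters, and the function name `mt_pt_ml` is illustrative.

```python
def mt_pt_ml(coverages, hi=0.8, lo=0.2):
    """Percentages of mostly tracked, partially tracked and mostly lost trajectories.

    coverages: for each ground-truth trajectory, the fraction of its length
               (between 0 and 1) during which it is correctly tracked.
    hi, lo: assumed thresholds for "mostly tracked" and "mostly lost".
    """
    n = len(coverages)
    mt = sum(1 for c in coverages if c >= hi)
    ml = sum(1 for c in coverages if c <= lo)
    pt = n - mt - ml
    return tuple(100.0 * count / n for count in (mt, pt, ml))

# Four ground-truth trajectories with different tracked fractions.
mt, pt, ml = mt_pt_ml([0.9, 0.85, 0.5, 0.1])
```

By construction the three percentages sum to 100, as in each row of Table 3.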

6.9. People Detection, Tracking and Re-identification Through a Video Camera Network

Participants: Malik Souded, François Brémond.

keywords: People detection, Object tracking, People re-identification, Region covariance descriptors, SIFT descriptor, LogitBoost, Particle filters.

This work aims at proposing a complete framework for people detection, tracking and re-identification through camera networks. Three main constraints have guided this work: high performance, real-time processing and genericity of the proposed methods (minimal human interaction/parametrization). This work is divided into three separate but dependent tasks:

6.9.1. People detection:

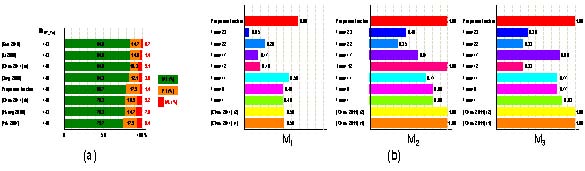

The proposed approach optimizes state-of-the-art methods [89], [93] which are based on training cascades of classifiers using the LogitBoost algorithm on region covariance descriptors. The optimization consists in clustering negative data before the training step, and speeds up both the training and detection processes while improving the detection performance. This approach has been published this year in [46]. The evaluation results and examples of detection are shown in Figures 24 and 25.
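A region covariance descriptor summarizes an image region by the covariance matrix of per-pixel feature vectors. The sketch below computes such a matrix in plain Python; the choice of features is left to the caller, and the toy two-dimensional features in the example are an assumption made only to keep the arithmetic easy to follow. The function name `region_covariance` is illustrative and does not come from the cited methods.

```python
def region_covariance(features):
    """Sample covariance matrix of per-pixel feature vectors in one region.

    features: list of d-dimensional feature vectors, one per pixel
              (in practice, typically position, intensity and gradients).
    Returns a d x d matrix as nested lists.
    """
    n, d = len(features), len(features[0])
    mean = [sum(f[j] for f in features) / n for j in range(d)]
    cov = [[0.0] * d for _ in range(d)]
    for f in features:
        for a in range(d):
            for b in range(d):
                cov[a][b] += (f[a] - mean[a]) * (f[b] - mean[b])
    # Unbiased estimate: divide by n - 1.
    return [[value / (n - 1) for value in row] for row in cov]

# Three pixels with two toy features each.
descriptor = region_covariance([(0.0, 0.0), (1.0, 2.0), (2.0, 4.0)])
```

The descriptor has a fixed size regardless of the region size, which is what makes it convenient as input to boosted classifiers.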

6.9.2. Object tracking:

The proposed object tracker uses a state-of-the-art background subtraction algorithm to initialize the objects to track, in collaboration with the proposed people detector in the case of people tracking. Objects are modelled using SIFT features, detected and selected in a particular manner. The tracking process is performed at two levels: SIFT features are tracked using a specific particle filter, then object tracking is deduced from the tracked SIFT features using the proposed data association framework. A fast occlusion management step is also proposed to complete the object tracking process. The evaluation results are shown in Figure 26.
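The feature-level tracking step relies on a particle filter. The report does not describe its specific design, so the sketch below is a generic bootstrap particle filter for a single 2D point, with a random-walk motion model and a Gaussian measurement likelihood. All names, noise levels and the synthetic measurements are assumptions.

```python
import math
import random

def particle_filter_step(particles, measurement, motion_std, meas_std, rng):
    """One predict / weight / resample cycle for 2D point particles."""
    # Predict: diffuse every particle with a random-walk motion model.
    moved = [(x + rng.gauss(0.0, motion_std), y + rng.gauss(0.0, motion_std))
             for x, y in particles]
    # Weight: Gaussian likelihood of the measured position for each particle.
    weights = [math.exp(-((x - measurement[0]) ** 2 + (y - measurement[1]) ** 2)
                        / (2.0 * meas_std ** 2))
               for x, y in moved]
    if sum(weights) == 0.0:
        weights = [1.0] * len(moved)  # degenerate case: fall back to uniform
    # Resample: draw particles in proportion to their weights.
    return rng.choices(moved, weights=weights, k=len(moved))

def mean_position(particles):
    """Point estimate of the tracked feature: the mean of the particles."""
    n = len(particles)
    return (sum(x for x, _ in particles) / n, sum(y for _, y in particles) / n)

rng = random.Random(0)
particles = [(0.0, 0.0)] * 500
# Synthetic measured positions of one feature moving along the diagonal.
for z in [(1.0, 1.0), (2.0, 2.0), (3.0, 3.0)]:
    particles = particle_filter_step(particles, z, motion_std=1.0,
                                     meas_std=0.5, rng=rng)
estimate = mean_position(particles)
```

In a feature tracker, the measurement would come from matching the feature descriptor in the new frame; here it is simply given.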

6.9.3. People re-identification:

A state-of-the-art method for people re-identification [67] is used as a baseline, and its performance has been improved. First, a fast image alignment method is proposed for the multiple-shot case. Then, texture information is added to the computed visual signatures. A method for classifying the visible side of a person is also proposed. Camera calibration information is used to filter out candidate people who do not match spatio-temporal constraints. Finally, an adaptive feature weighting method based on the visible side classification completes the improvements. The evaluation results are shown in Figure 27.
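One way to read the spatio-temporal filtering step is as a plausibility test on travel time between cameras. The sketch below is an assumption about how such a constraint could look, not the method used in this work: it keeps only candidates who could have covered the distance between the two cameras at a bounded speed. The record fields, the distance table and the speed limit are hypothetical.

```python
def plausible_candidates(query, candidates, camera_distance_m, max_speed_mps=3.0):
    """Keep candidates that could have reached the query camera in time.

    query, candidates: dicts with hypothetical keys "camera" and "time_s".
    camera_distance_m: dict mapping (camera_from, camera_to) to a walking
                       distance in metres, assumed derived from calibration.
    max_speed_mps: assumed upper bound on a person's speed.
    """
    kept = []
    for cand in candidates:
        elapsed = query["time_s"] - cand["time_s"]
        distance = camera_distance_m[(cand["camera"], query["camera"])]
        # Reject candidates seen after the query or needing an implausible speed.
        if elapsed > 0 and distance / elapsed <= max_speed_mps:
            kept.append(cand)
    return kept

distances = {("A", "B"): 30.0}
query = {"camera": "B", "time_s": 100.0}
candidates = [
    {"id": 1, "camera": "A", "time_s": 80.0},   # needs 1.5 m/s: plausible
    {"id": 2, "camera": "A", "time_s": 98.0},   # needs 15 m/s: rejected
    {"id": 3, "camera": "A", "time_s": 120.0},  # seen after the query: rejected
]
kept = plausible_candidates(query, candidates, distances)
```

Filtering of this kind shrinks the gallery before appearance signatures are compared, which can reduce both computation and false matches.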

This work has been published in [28].

Figure 24. People detector evaluation and comparison on Inria, DaimlerChrysler, Caltech and CAVIAR datasets.

6.10. People Retrieval in a Network of Cameras

Participants: Sławomir Bąk, Marco San Biago, Ratnesh Kumar, Vasanth Bathrinarayanan, François Brémond.

keywords: Brownian statistics, re-identification, retrieval

Figure 25. Some examples of detection using the proposed people detector.

Figure 26. Object tracking evaluation on: (a) CAVIAR dataset using MT, PT and ML metrics. (b) ETI-VS1-BE-18-C4 sequence from ETISEO dataset, using ETISEO metrics.

Figure 27. People re-identification evaluation on VIPeR (left), iLids-119 (middle) and CAVIAR4REID (right) datasets.